Most "AI agents" for GTM have the memory of a goldfish. Here's how to build systems that actually learn from outcomes.

This is part of a 3-post series on AI infrastructure for GTM:

1. Context Graphs - The data foundation (memory, world model)

2. Agent Harness - The coordination infrastructure (policies, audit trails)

3. Long Horizon Agents - The capability that emerges when you have both (you are here)

Quick Answer: Long Horizon Agents for GTM

What is a long horizon agent?

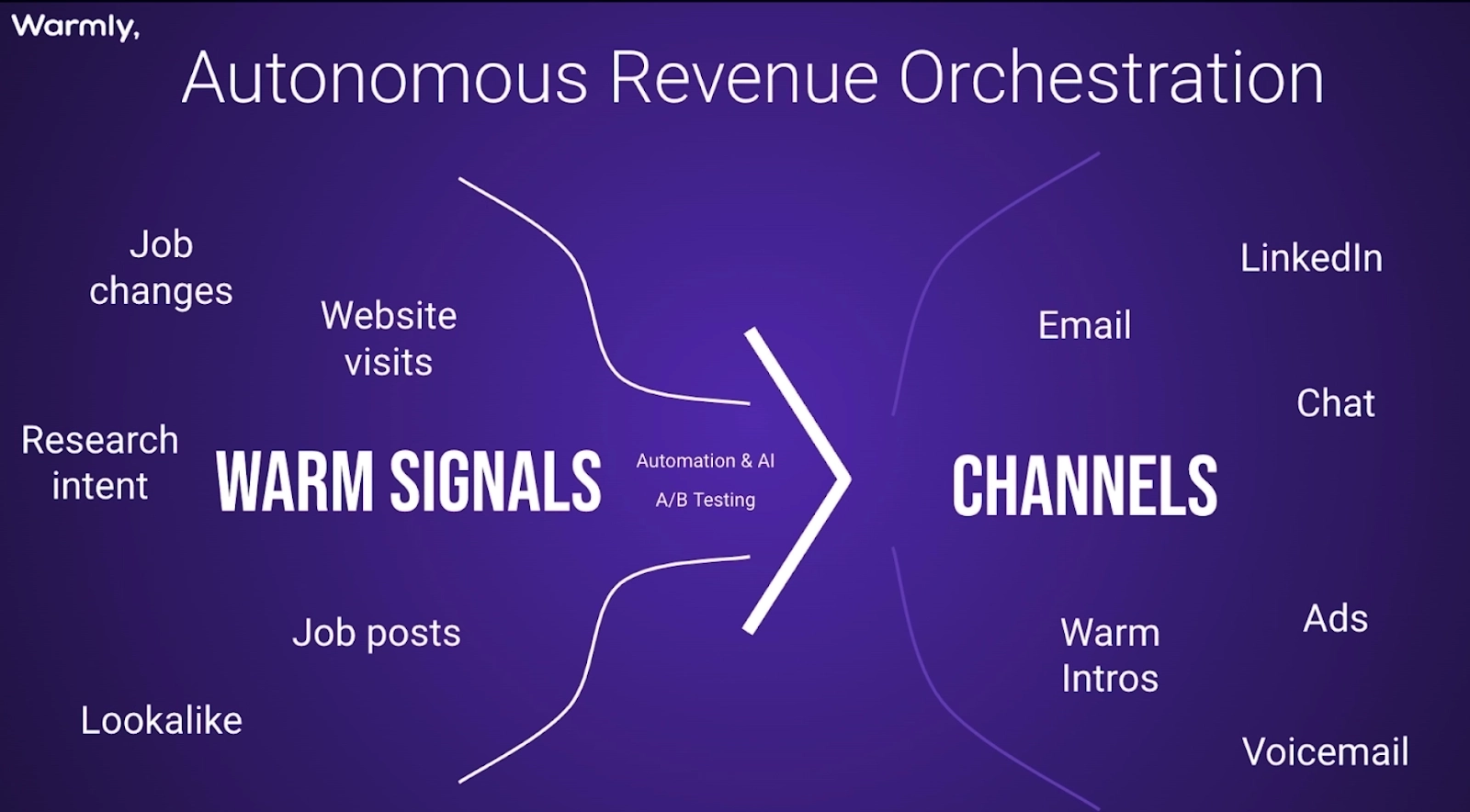

Long-horizon agents are advanced AI systems designed to autonomously complete complex, multi-step tasks that span extended periods—typically involving dozens to hundreds of sequential actions, decisions, and iterations over hours, days, or weeks. Unlike short-horizon agents that execute a handful of steps in minutes, long-horizon agents maintain persistent context, track decisions across time, and learn from outcomes to improve future performance.

Best architecture for long horizon GTM agents: A 5-layer stack built on Context Graphs (entity relationships), Decision Ledgers (immutable audit trails), and Policy Engines (rules that evolve from outcomes), topped by computed summaries and a learning loop. This enables AI to remember past interactions, understand buying committee dynamics, and improve based on what actually closed.

Best use case for long horizon agents: Account-based revenue motions where the buying cycle spans 60-180 days and requires coordinated multi-channel engagement with multiple stakeholders. Think enterprise SaaS, not transactional e-commerce.

Who benefits most from long horizon agents:

- B2B companies with 30+ day sales cycles

- Teams running ABM motions across multiple channels

- Revenue orgs that need to coordinate SDR, AE, and marketing touches

- Companies tired of "AI SDRs" that spam without context

Who shouldn't invest in long horizon agents: PLG companies with sub-7-day sales cycles where quick automation is sufficient, or teams without the data infrastructure to feed a persistent context layer.

Best long horizon agent platforms (2026):

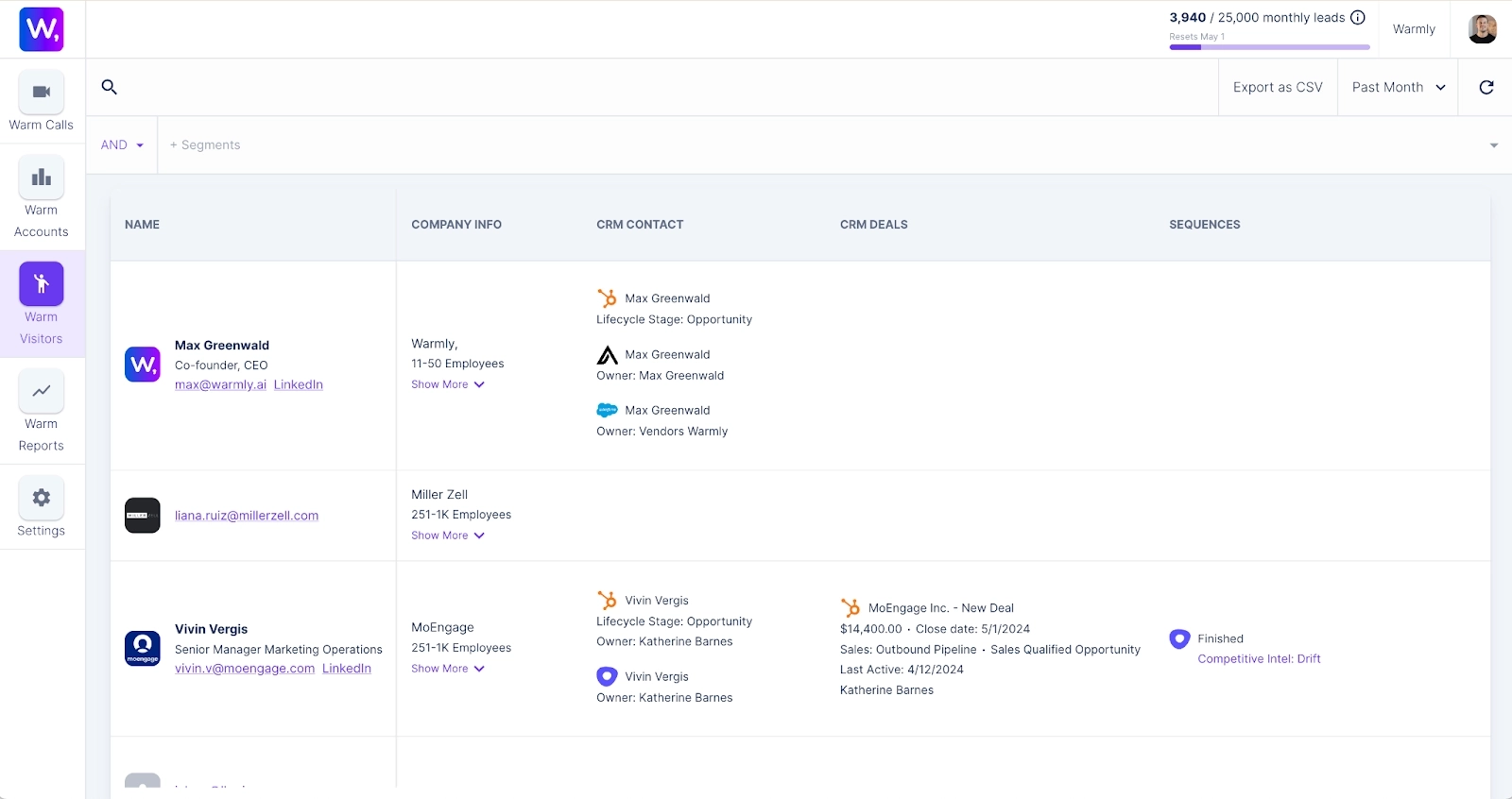

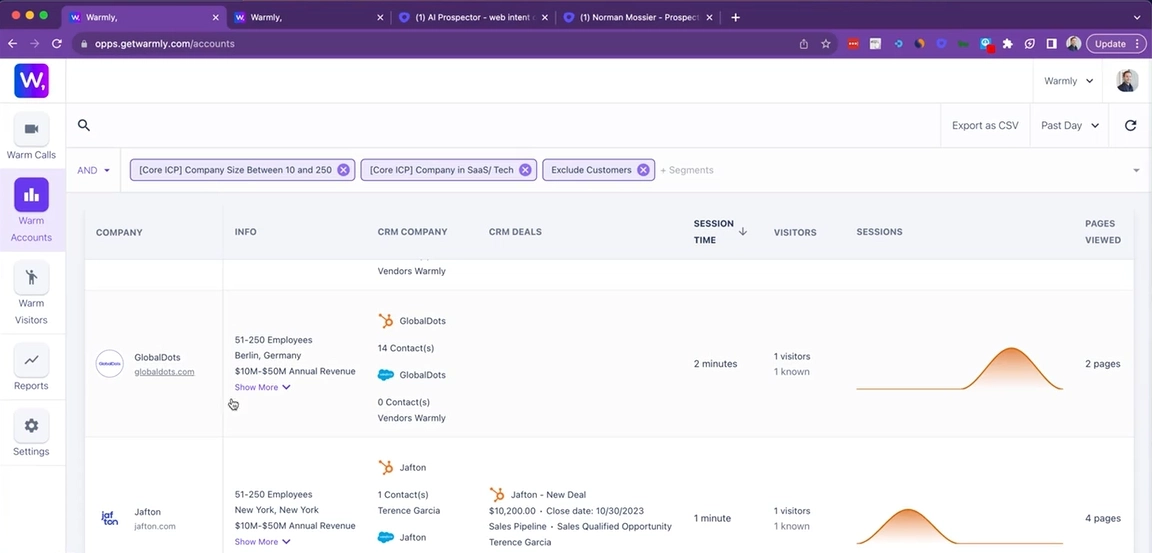

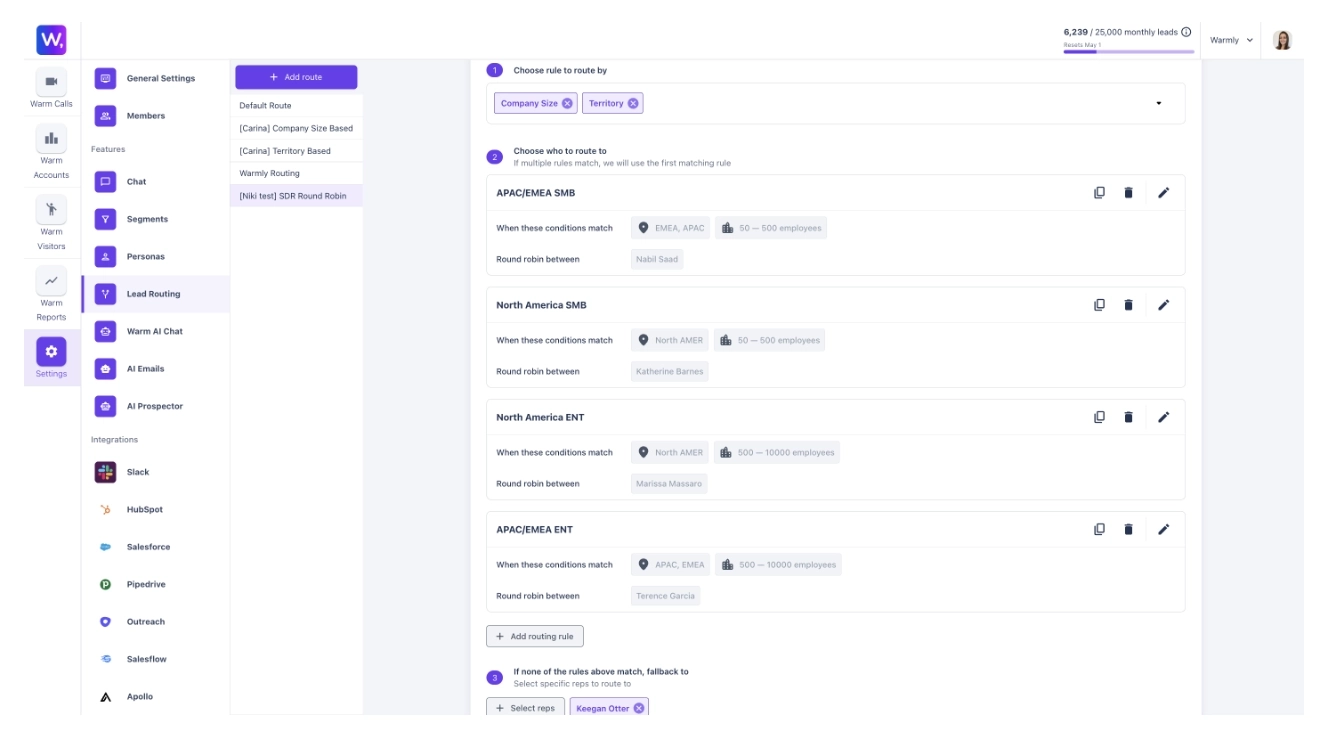

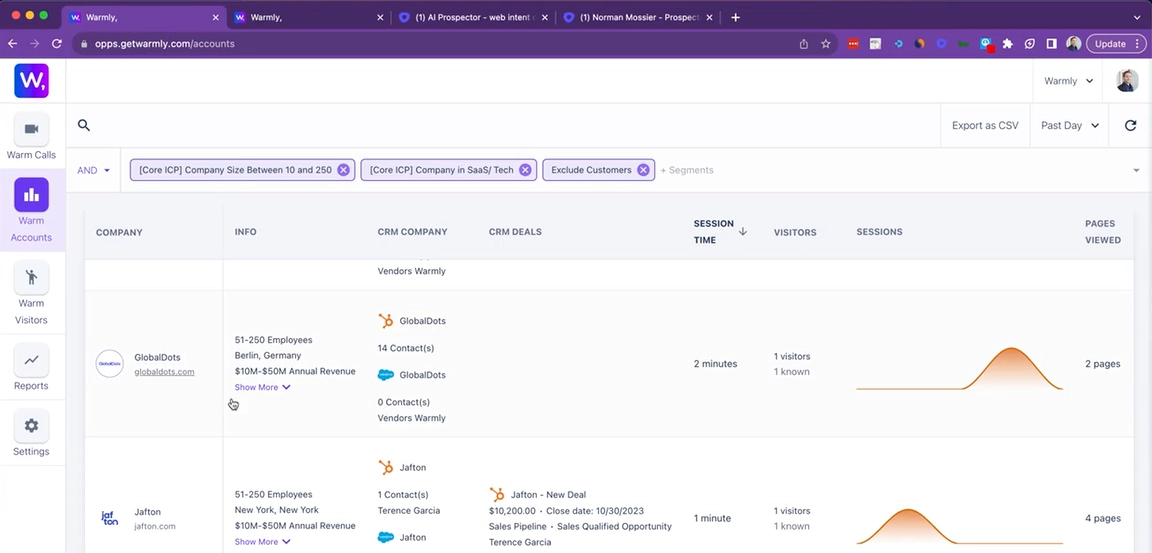

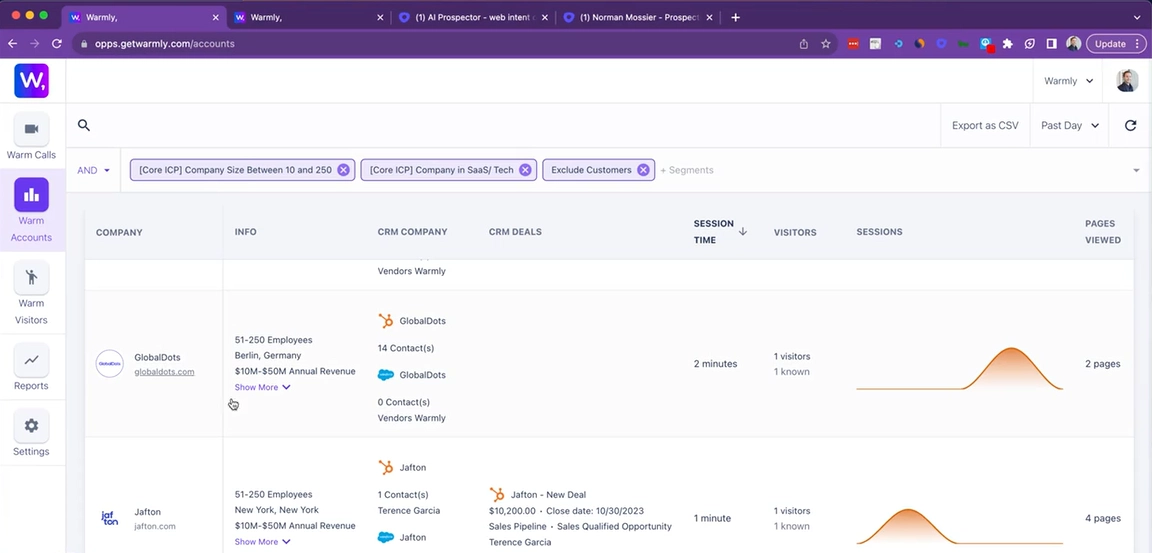

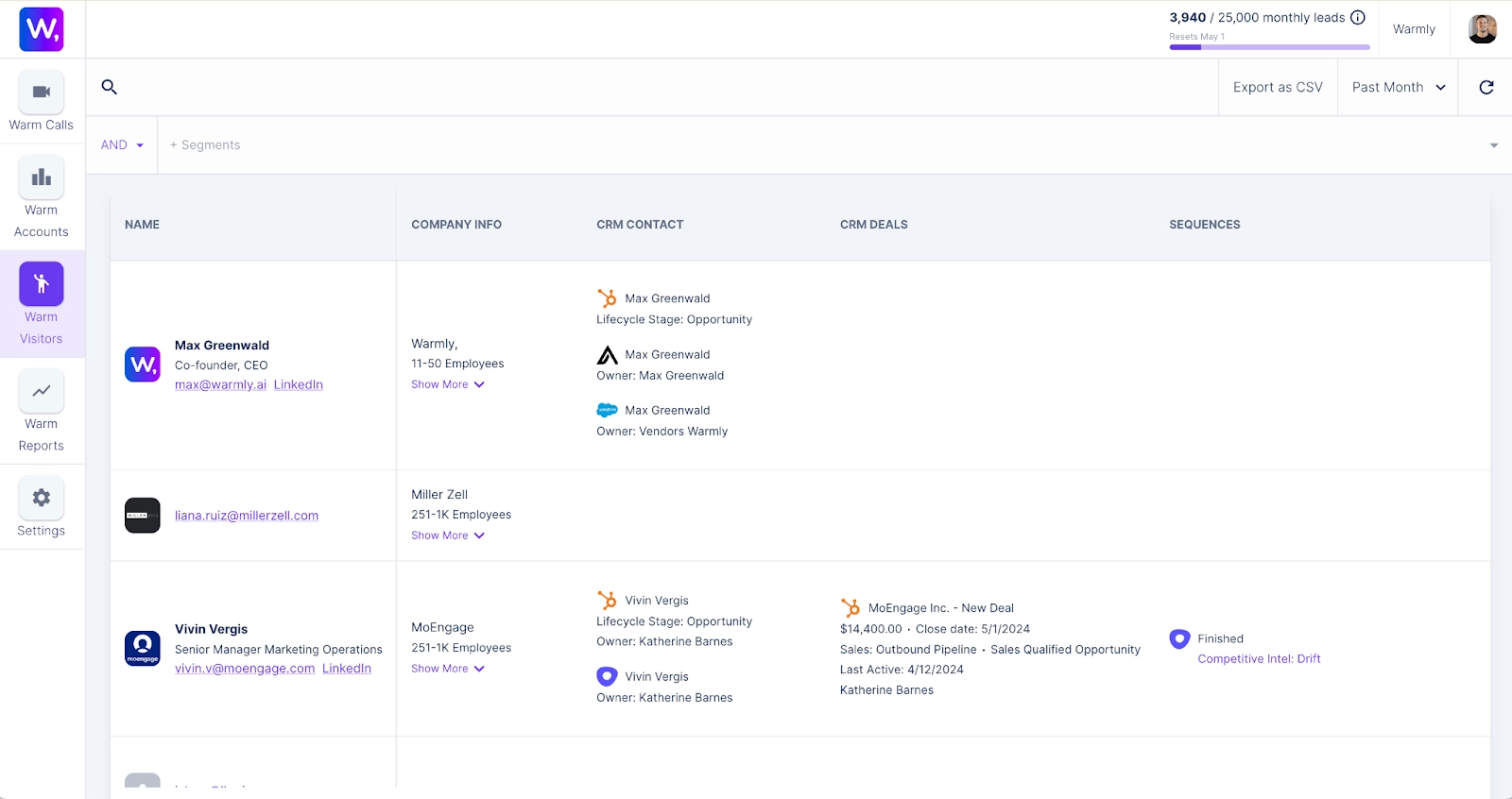

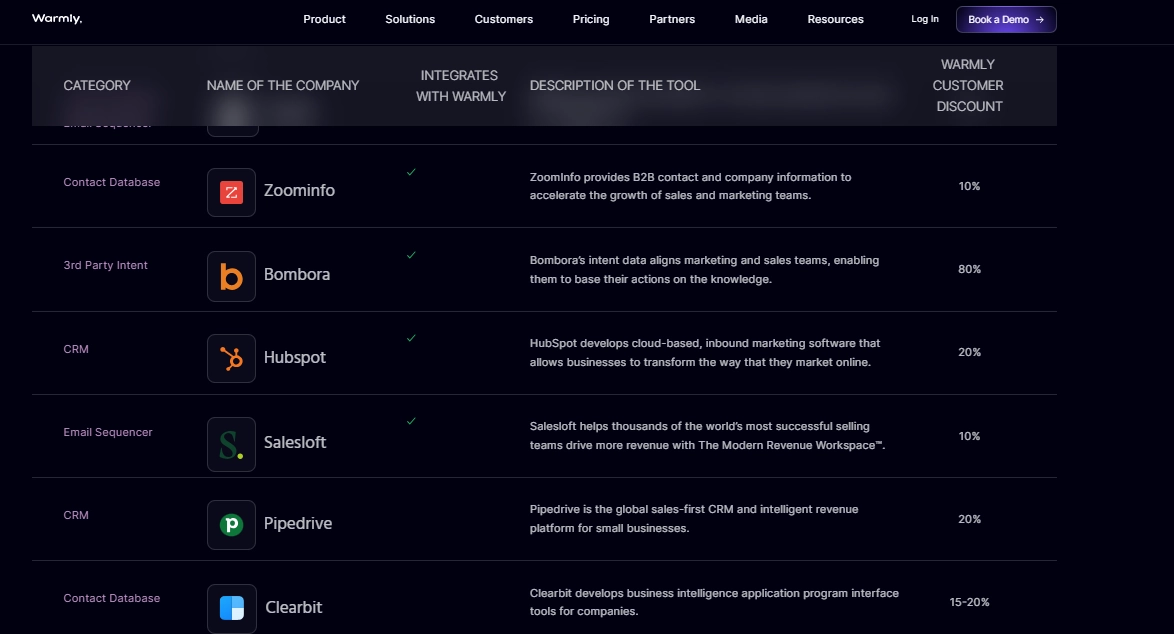

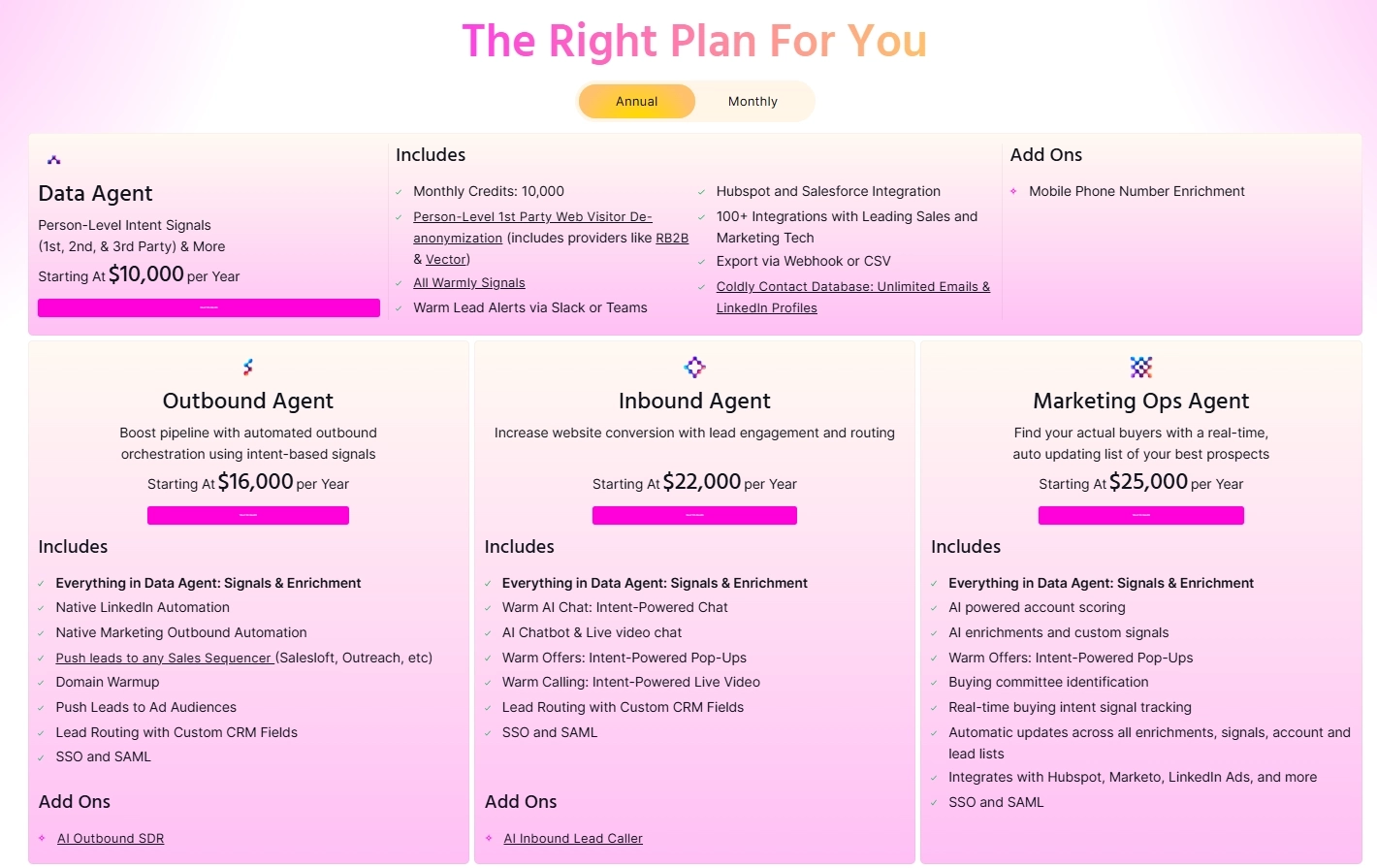

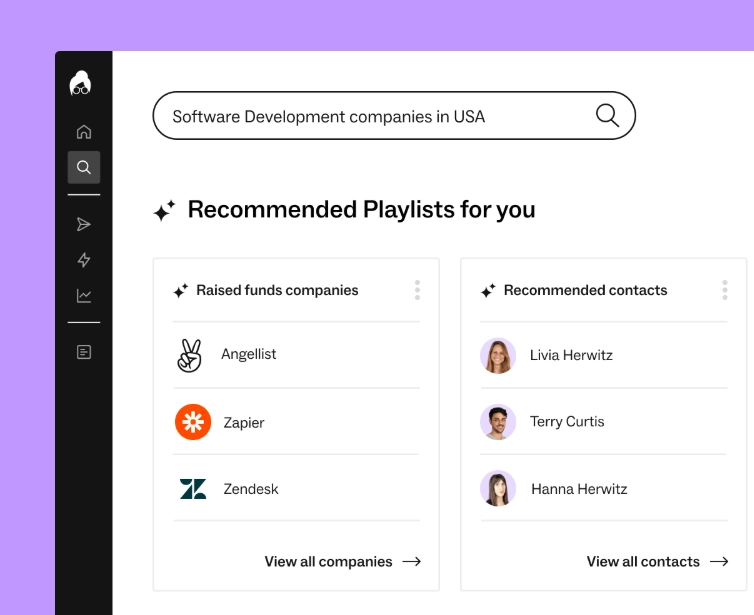

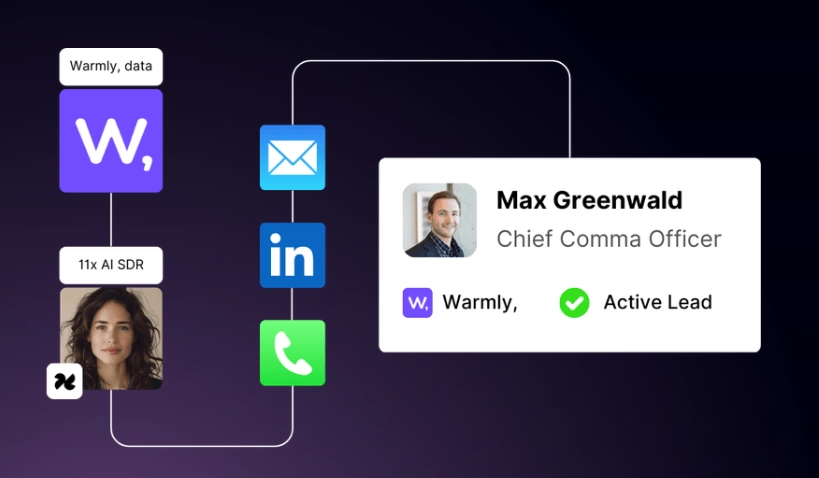

- Warmly - Best for mid-market and enterprise B2B, with a 400M+ profile context graph and buying committee tracking

- Clari/Salesloft - Best for revenue intelligence and forecasting in complex cycles

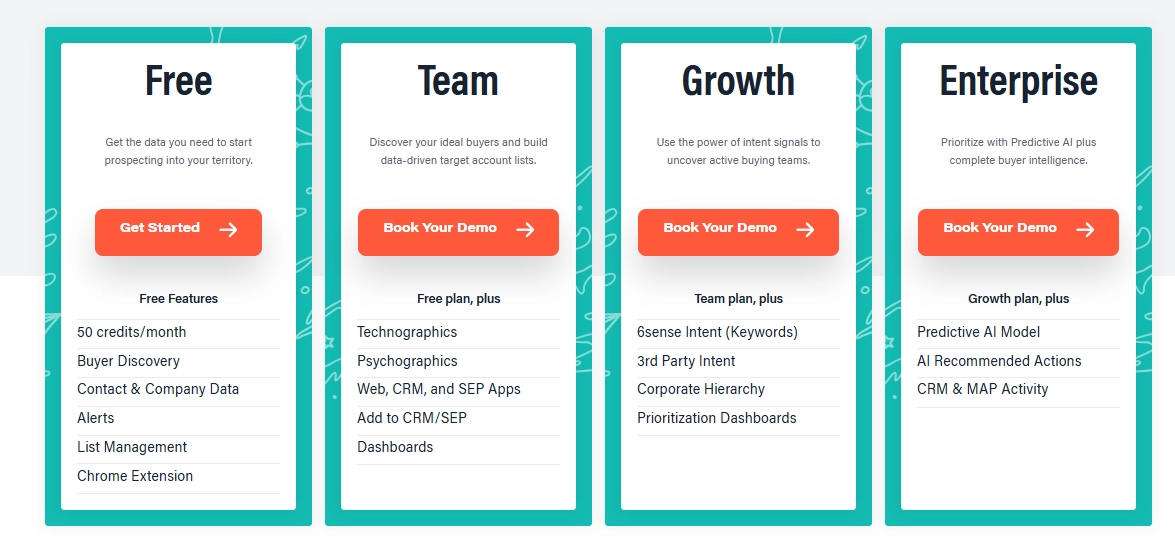

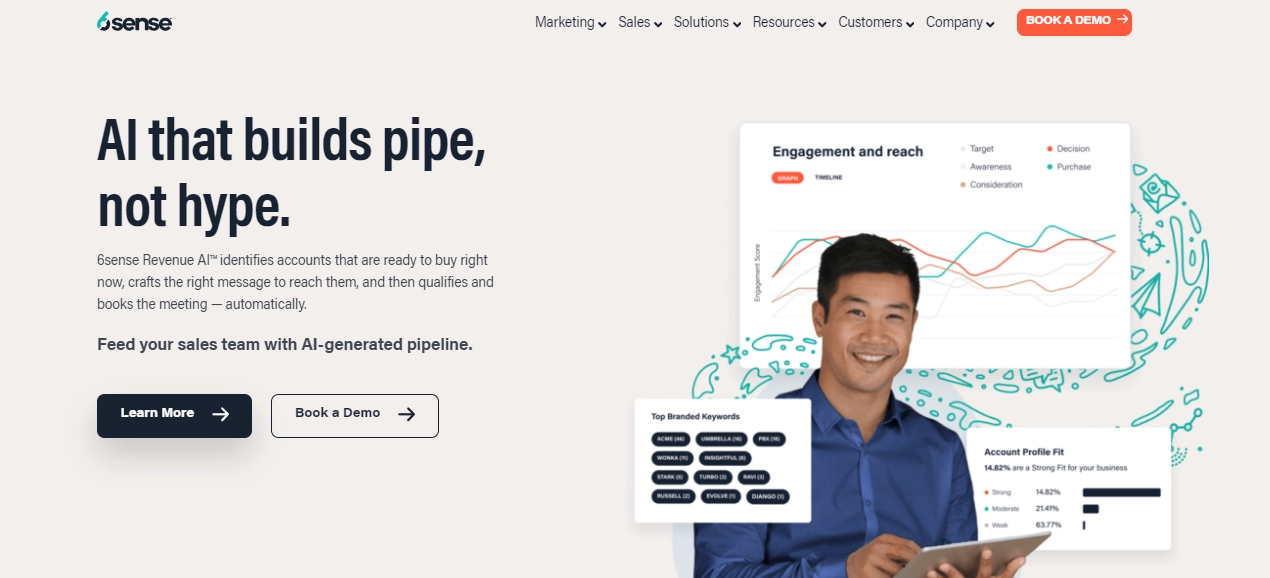

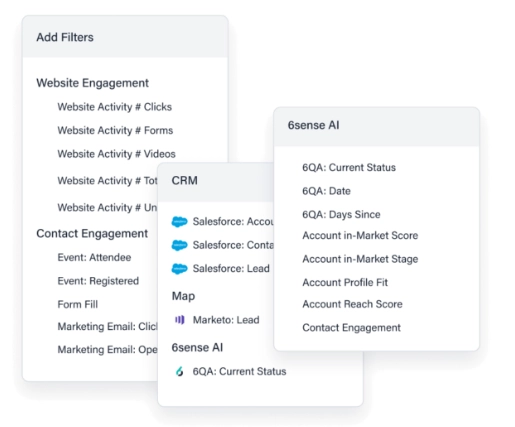

- 6sense - Best for ABM-focused intent data with account identification

- Gong - Best for conversation intelligence with deal progression insights

The Problem: Your AI Has Amnesia

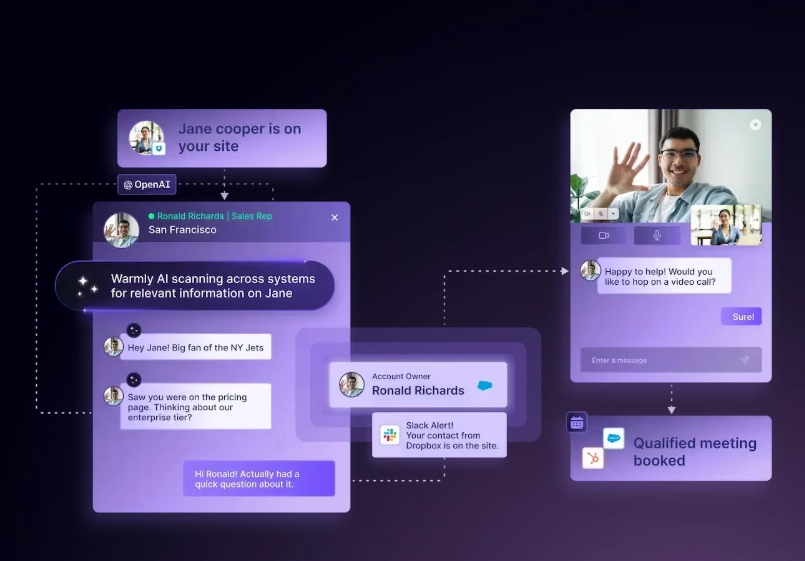

Here's what happens with most AI sales automation today:

- Website visitor identified

- AI sends email sequence

- No response

- AI forgets everything

- Same person visits again

- AI sends the same sequence

- Prospect annoyed, account burned

This isn't intelligence. It's automation with a lobotomy.

The deeper problem: GTM doesn't happen in moments. It happens over months.

A typical B2B deal involves:

- 6-10 stakeholders in the buying committee

- 15-20 touchpoints across channels

- 60-180 days from first touch to close

- Dozens of micro-decisions about who to contact, when, and with what message

When your AI can't remember what happened last week, it can't optimize for what closes next quarter.

Most agentic AI examples you'll read about are "short horizon" by design. They optimize for task completion (send this email, update this record) rather than goal achievement (close this deal, expand this account).

That's like judging a chess player by how fast they move pieces instead of whether they win games.

What Makes Long Horizon Agents Different

Long horizon agents aren't just "better AI." They're architecturally different - and the capability gap is widening fast.

According to METR (Model Evaluation & Threat Research), the length of tasks that frontier AI agents can complete autonomously with 50% reliability (measured by how long those tasks take skilled humans) has been doubling approximately every 7 months. The implication: long-horizon autonomous agents are coming to GTM whether you're ready or not.

Sequoia Capital's research suggests that by late 2026, AI agents may routinely complete tasks requiring 50-500 sequential steps - the kind of complex, multi-stakeholder workflows that define B2B sales cycles. Short-horizon agents (1-15 steps completed in minutes) will become table stakes; competitive advantage will come from systems that can reason across weeks and quarters.

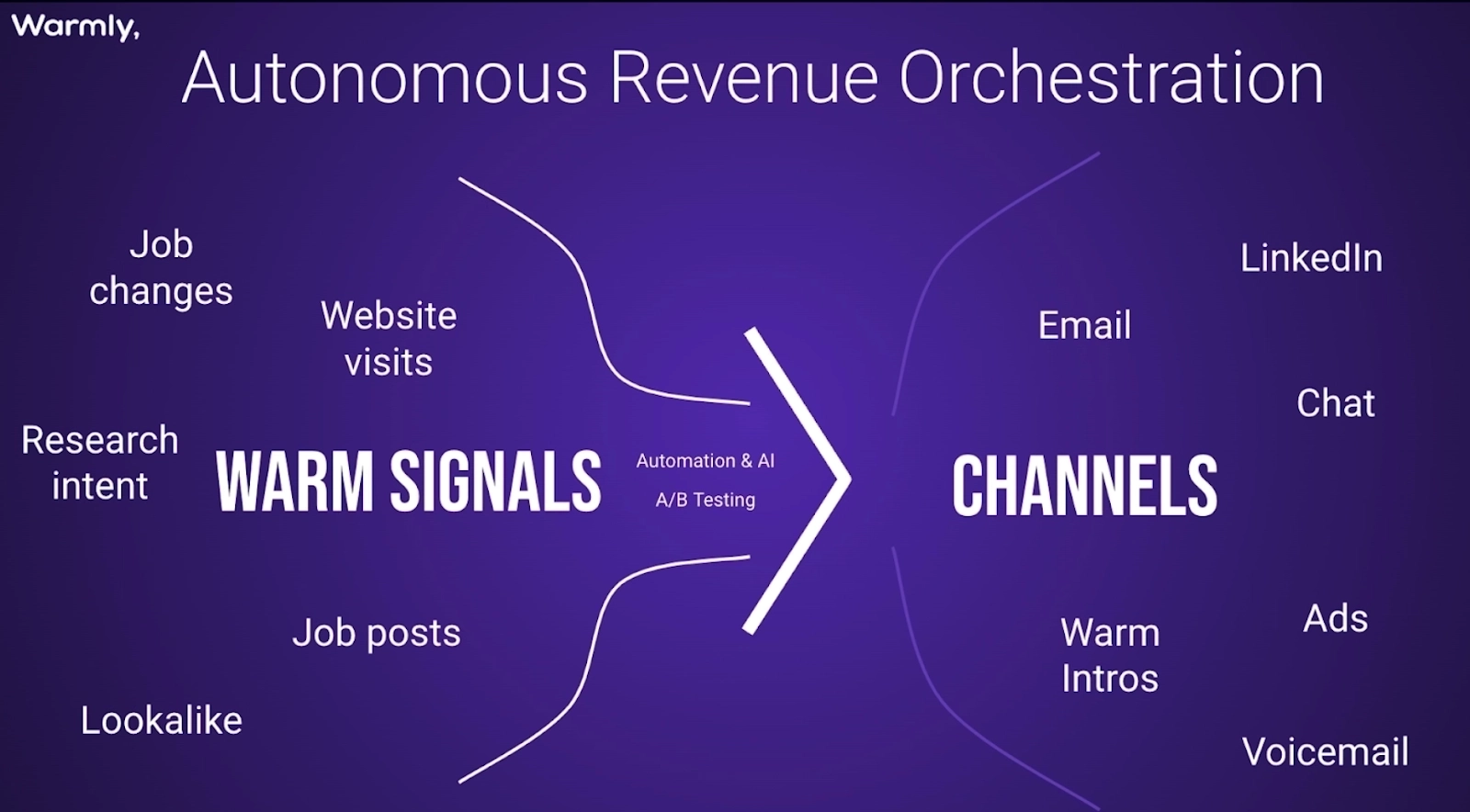

Here are the six characteristics that separate long horizon agents from task-level automation:

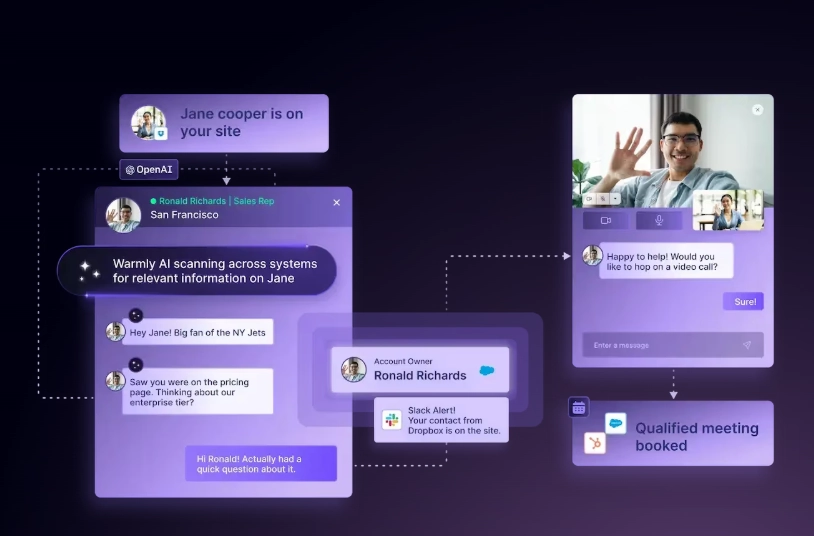

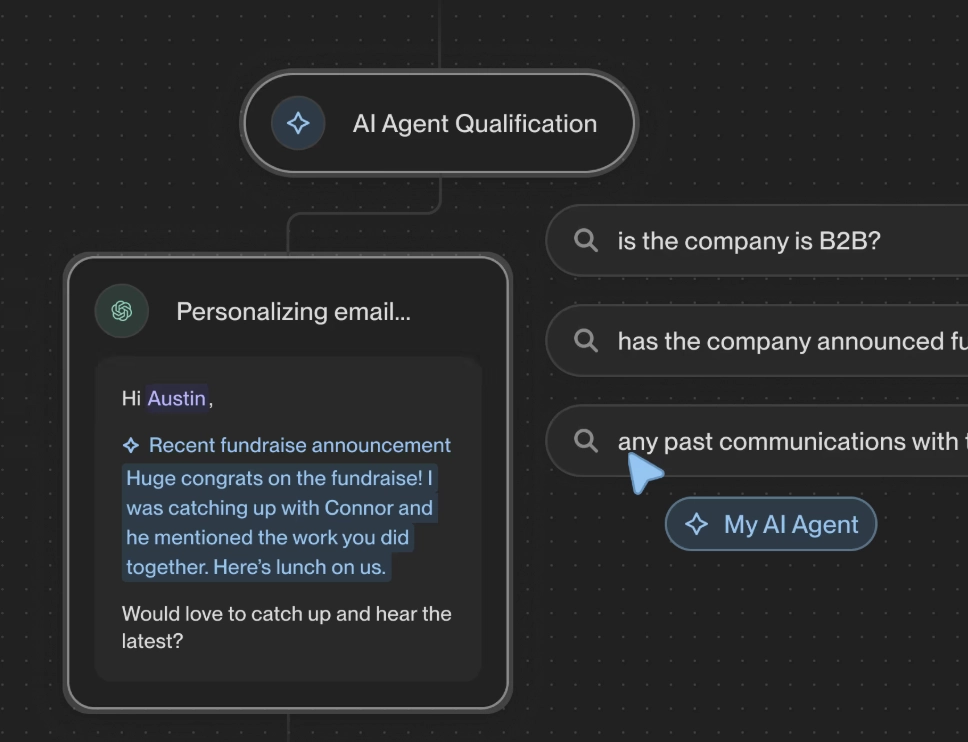

1. Persistent Entity Memory

Short horizon agents process events. Long horizon agents maintain a world model.

The difference:

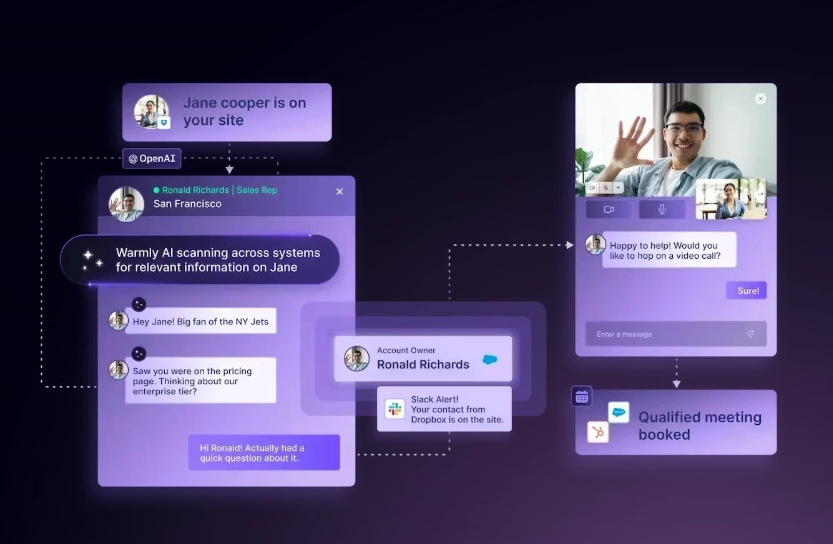

A proper GTM intelligence system knows that John isn't just a visitor. He's part of a buying committee, has a relationship history with your company, and his behavior pattern suggests he's in evaluation mode.

This requires what we call a Context Graph: a unified data structure connecting companies, people, deals, activities, and outcomes. Not a flat CRM record. A living map of relationships.

2. Decision Traces (Not Just Action Logs)

Most tools log what happened. Long horizon agents log why. Every decision gets recorded with:

- What was decided

- What information existed at decision time

- What policy or rule triggered the decision

- What outcome resulted (filled in later)

Why this matters: Three months from now, when you're analyzing why certain deals closed and others didn't, you need to know what the AI was thinking. Not just that it sent an email, but why it chose that channel, that message, that timing.

Without decision traces, AI agents are black boxes. With them, you get full auditability and the ability to actually learn from outcomes.
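To make the four fields concrete, here is a minimal Python sketch of a decision trace. The names `DecisionTrace` and `record_outcome` are hypothetical, not any product's API. Freezing the dataclass is one way, assumed here, to keep the original decision untouched while the outcome is attached later as a new copy.

```python
from dataclasses import dataclass, field, replace
from datetime import datetime, timezone
from typing import Any, Optional


@dataclass(frozen=True)
class DecisionTrace:
    """One logged decision: what was decided, with what context, under which policy."""

    decision: str              # what was decided, e.g. "linkedin_message"
    context: dict[str, Any]    # what information existed at decision time
    policy: str                # which policy or rule triggered the decision
    decided_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    outcome: Optional[str] = None  # filled in later, once a result is observed


def record_outcome(trace: DecisionTrace, outcome: str) -> DecisionTrace:
    """Return a copy with the outcome filled in; the original trace is never mutated."""
    if trace.outcome is not None:
        raise ValueError("outcome already recorded for this decision")
    return replace(trace, outcome=outcome)


trace = DecisionTrace(
    decision="linkedin_message",
    context={"email_bounced": True, "linkedin_active_this_week": True},
    policy="channel_selection v2.3.1",
)
observed = record_outcome(trace, "meeting_booked")
```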

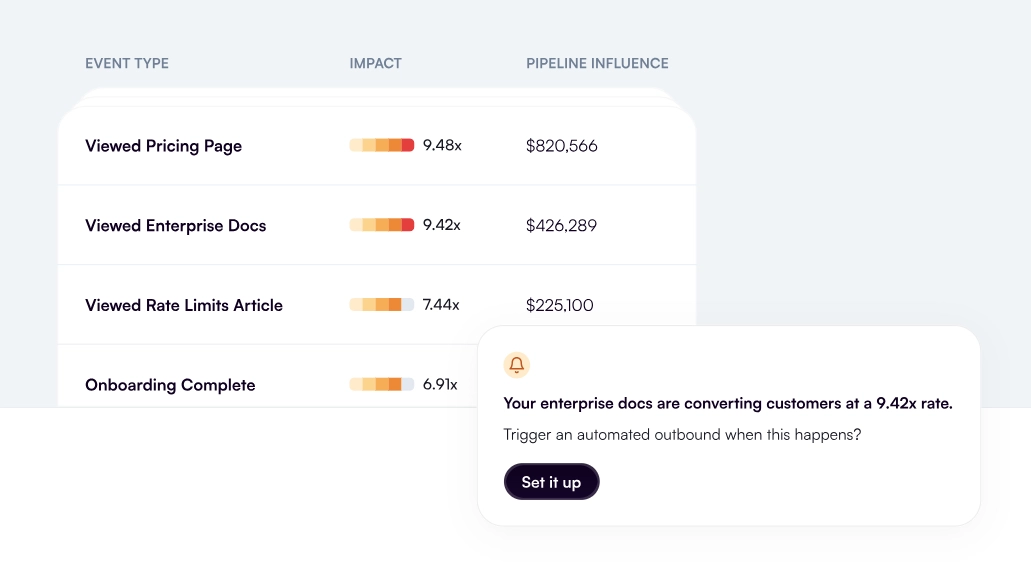

3. Outcome Attribution Across Time

Here's the question short horizon agents can't answer: "Did that LinkedIn message we sent in January contribute to the deal that closed in April?"

Long horizon agents maintain the thread. They know:

- First touch was a website intent signal on Jan 15

- LinkedIn outreach on Jan 20 got a reply

- Meeting booked Feb 3

- Deal created Feb 10

- Champion changed jobs (detected via social signals)

- New champion engaged March 1

- Deal closed April 15

This isn't just nice for reporting. It's essential for learning. If you don't connect decisions to outcomes, your AI never improves.
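One simple way to answer the January-to-April question is linear multi-touch attribution, which splits credit evenly across every touch before the close. This is a swapped-in, deliberately basic model, not necessarily how any vendor attributes. The sketch treats the four outreach and engagement events in the timeline as touches (deal creation and the job change are state changes, not touches) and assumes the year is 2026.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Touch:
    day: date
    kind: str  # touch type, e.g. "website_intent" or "linkedin"


def linear_attribution(touches: list[Touch], closed_on: date) -> dict[str, float]:
    """Split one unit of credit evenly across every touch on or before the close date."""
    eligible = [t for t in touches if t.day <= closed_on]
    if not eligible:
        return {}
    share = 1.0 / len(eligible)
    credit: dict[str, float] = {}
    for t in eligible:
        credit[t.kind] = credit.get(t.kind, 0.0) + share
    return credit


# The deal thread from the timeline above; the year 2026 is assumed for illustration.
thread = [
    Touch(date(2026, 1, 15), "website_intent"),
    Touch(date(2026, 1, 20), "linkedin"),
    Touch(date(2026, 2, 3), "meeting"),
    Touch(date(2026, 3, 1), "new_champion_engaged"),
]
credit = linear_attribution(thread, closed_on=date(2026, 4, 15))
# credit["linkedin"] is 0.25: the January LinkedIn message gets a quarter of the credit.
```

Position-based or time-decay models would weight first touches or recent touches more heavily; the point is only that the thread must exist before any model can assign credit.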

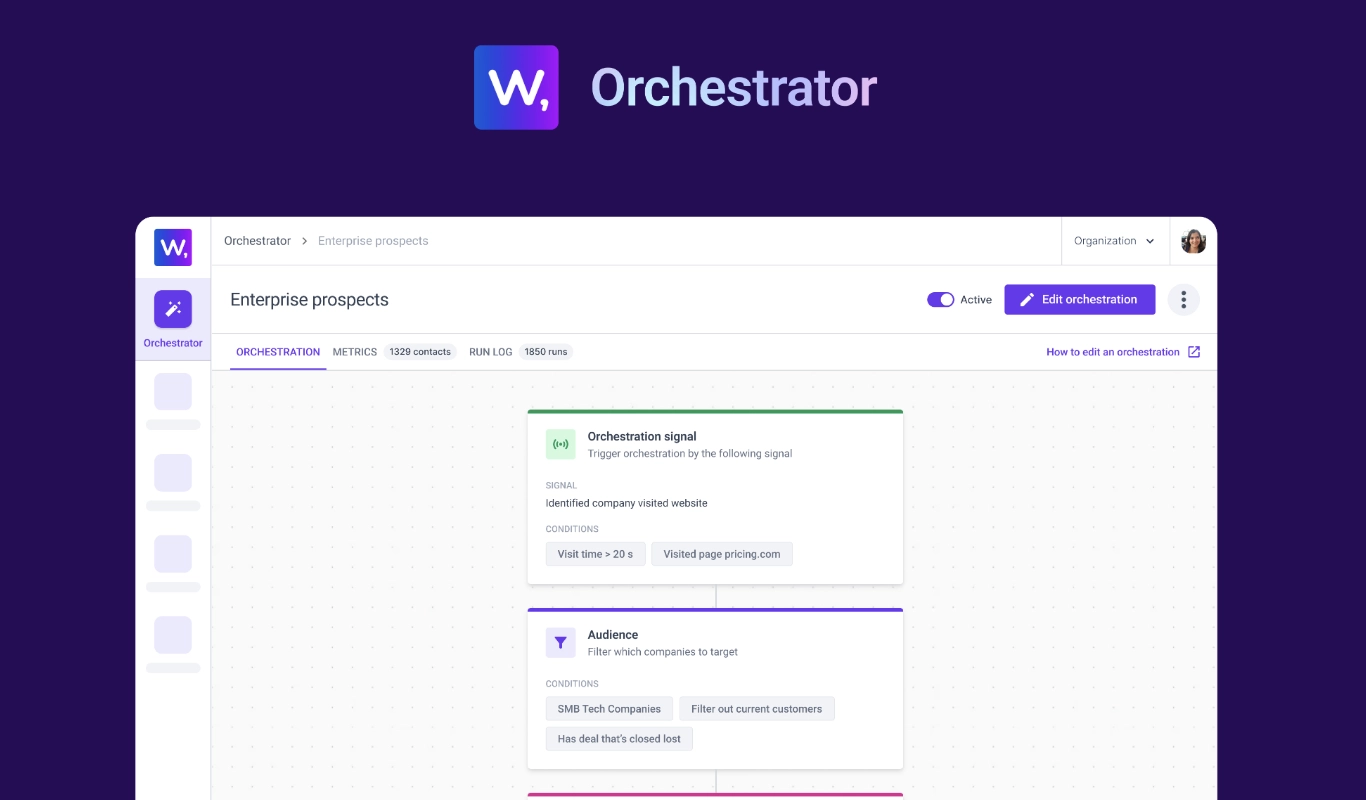

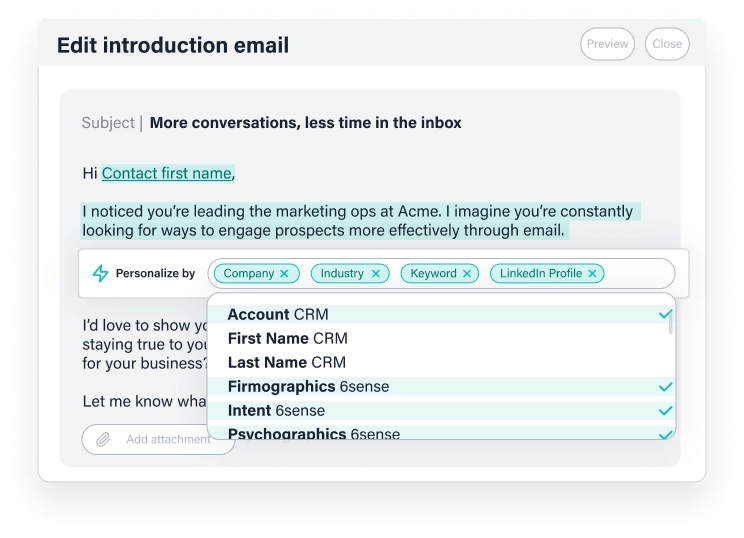

4. Policy Evolution (Not Static Rules)

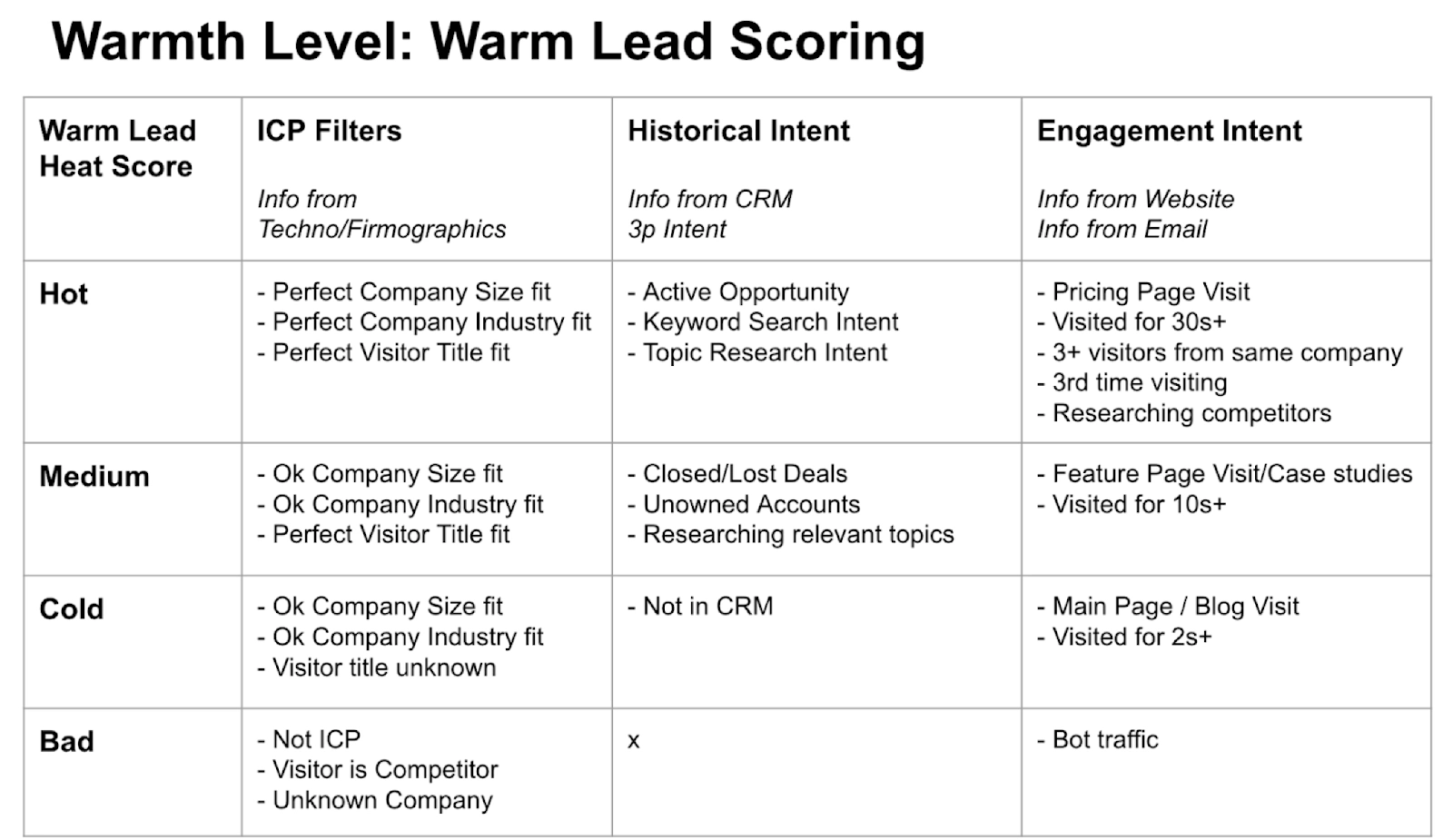

Traditional automation: "If lead score > 50, send email sequence A."

Long horizon agents: "If lead score > 50 AND past outcomes show LinkedIn works better than email for this persona AND we haven't touched this account in 14 days AND the champion is active on LinkedIn this week, send a LinkedIn message. Log the decision. Update the policy if the outcome differs from expectation."

Policies are versioned rules that evolve based on what actually works. When the data shows your timing assumptions were wrong, the policy updates. When a new channel outperforms old ones, the policy adapts.

This is how AI gets smarter over quarters, not just faster at executing the same playbook.
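The compound long horizon rule can be expressed as a plain function, sketched below. The field names on the hypothetical `ContactState` are assumptions; the function returns the named conditions alongside the action so the reasoning can be written to a decision log. Logging and policy updates themselves are left out.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class ContactState:
    lead_score: int
    best_channel_for_persona: str   # learned from past outcomes
    last_account_touch: date
    champion_active_on_linkedin_this_week: bool


def linkedin_rule(state: ContactState, today: date) -> tuple[Optional[str], dict[str, bool]]:
    """Return ("linkedin_message", reasons) only if every condition holds; otherwise (None, reasons)."""
    reasons = {
        "lead_score_over_50": state.lead_score > 50,
        "linkedin_outperforms_email": state.best_channel_for_persona == "linkedin",
        "no_touch_in_14_days": (today - state.last_account_touch).days >= 14,
        "champion_active_on_linkedin": state.champion_active_on_linkedin_this_week,
    }
    action = "linkedin_message" if all(reasons.values()) else None  # None defers to other rules
    return action, reasons


action, why = linkedin_rule(ContactState(72, "linkedin", date(2026, 1, 1), True), date(2026, 1, 20))
```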

5. Memory Architecture (Short-Term vs. Long-Term)

Understanding AI agent memory is critical for evaluating long horizon capabilities. There are two types that matter:

Short-term memory enables an AI agent to remember recent inputs within a session or sequence. This is what most AI SDRs have: they remember the conversation you're having right now, but forget it tomorrow.

Long-term memory persists knowledge across sessions, tasks, and time. This is what separates long horizon agents from task-level automation. Long-term memory enables:

- Recalling that you spoke to this person 6 months ago

- Knowing their objections from the last conversation

- Understanding their relationship to other stakeholders

- Tracking how their engagement pattern has evolved

The technical challenge: Most LLMs are stateless by default. Every interaction exists in isolation. Building persistent memory requires explicit architecture decisions:

- What gets stored: Entity facts, decision traces, conversation summaries

- How it's retrieved: Semantic search, graph traversal, computed summaries

- How it's updated: Real-time event processing, periodic refresh, outcome attribution
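Here is a toy illustration of the short-term vs. long-term split, picking the simplest option for each decision above: an in-process dict for storage, exact-key lookup for retrieval, and a summary written at session end for updates. `AgentMemory` is a hypothetical class; it does not reflect the APIs of Mem0, Letta, or Redis.

```python
from collections import defaultdict, deque


class AgentMemory:
    """Toy two-tier memory: a bounded short-term buffer and a long-term store keyed by entity."""

    def __init__(self, short_term_size: int = 5) -> None:
        self.short_term: deque = deque(maxlen=short_term_size)  # recent inputs in this session
        self.long_term: defaultdict = defaultdict(list)         # entity_id -> persisted facts

    def observe(self, text: str) -> None:
        self.short_term.append(text)

    def remember(self, entity_id: str, fact: str) -> None:
        """Store an entity fact directly in long-term memory."""
        self.long_term[entity_id].append(fact)

    def end_session(self, entity_id: str) -> None:
        """Persist a naive summary of the session, then clear short-term memory."""
        if self.short_term:
            self.long_term[entity_id].append("session summary: " + " | ".join(self.short_term))
        self.short_term.clear()

    def recall(self, entity_id: str) -> list:
        """Retrieve by exact entity key (the simplest option; no semantic search here)."""
        return list(self.long_term.get(entity_id, []))


mem = AgentMemory()
mem.remember("person:john", "objection: pricing too high")
mem.observe("asked about SSO")
mem.observe("requested a security review")
mem.end_session("person:john")
```

A production system would replace the string join with an LLM-generated summary and add semantic or graph-based retrieval, but the lifecycle (buffer, summarize, persist, recall) stays the same.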

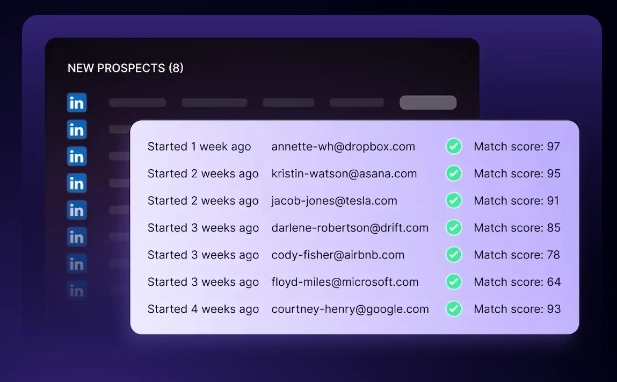

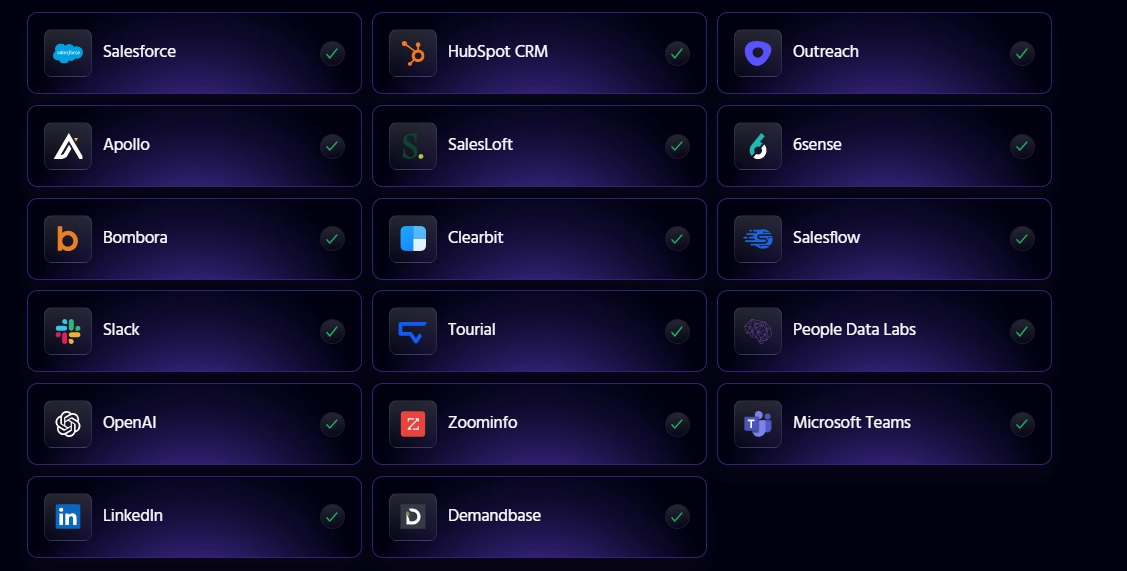

Platforms like Mem0, Letta, and Redis provide memory infrastructure. But for GTM-specific use cases, you need memory that understands sales concepts: buying committees, deal stages, engagement patterns, champion relationships.

That's why we built our memory layer on top of a Context Graph rather than generic memory infrastructure. The graph knows that "Sarah from Acme" isn't just a contact to remember. She's a champion on deal #1234, reports to the CRO, previously worked at your customer BigCo, and has been increasingly engaged over the past 30 days.

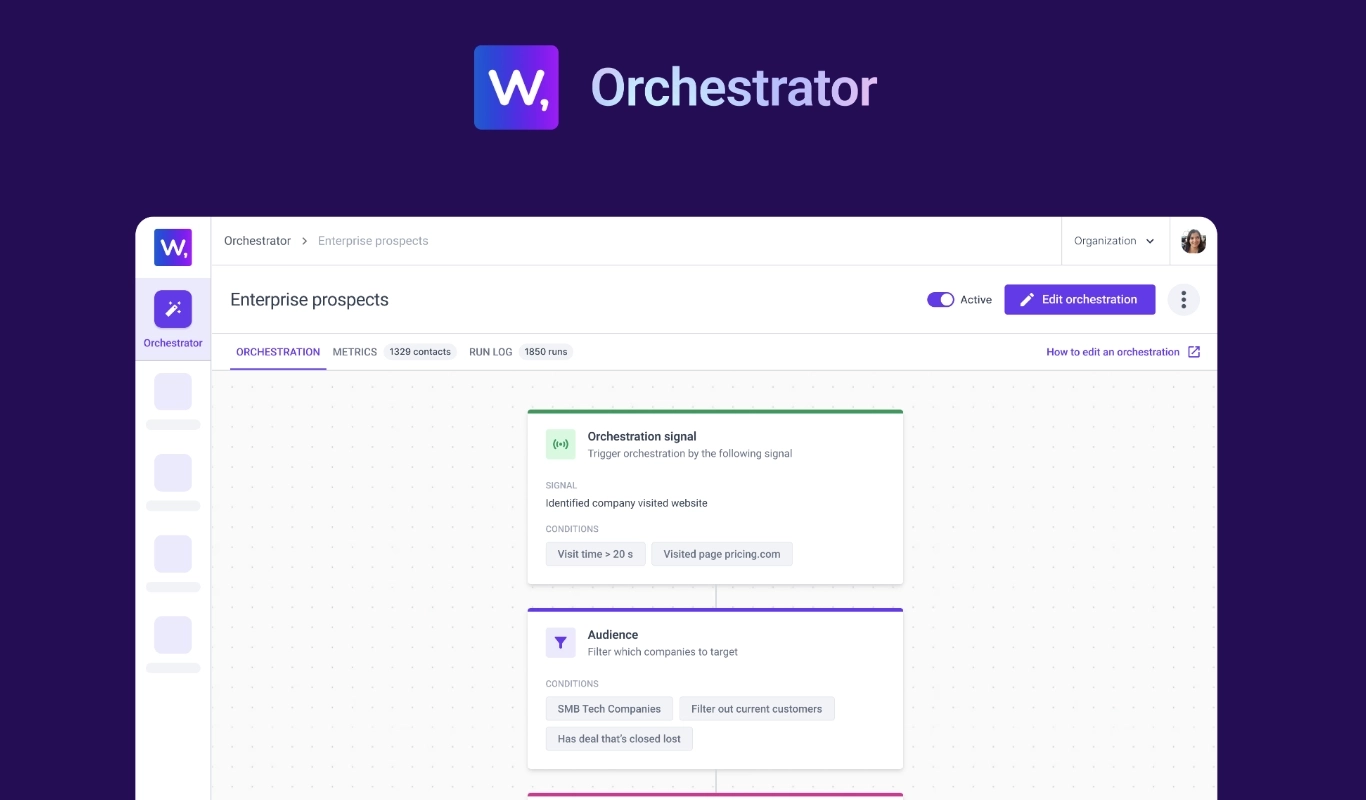

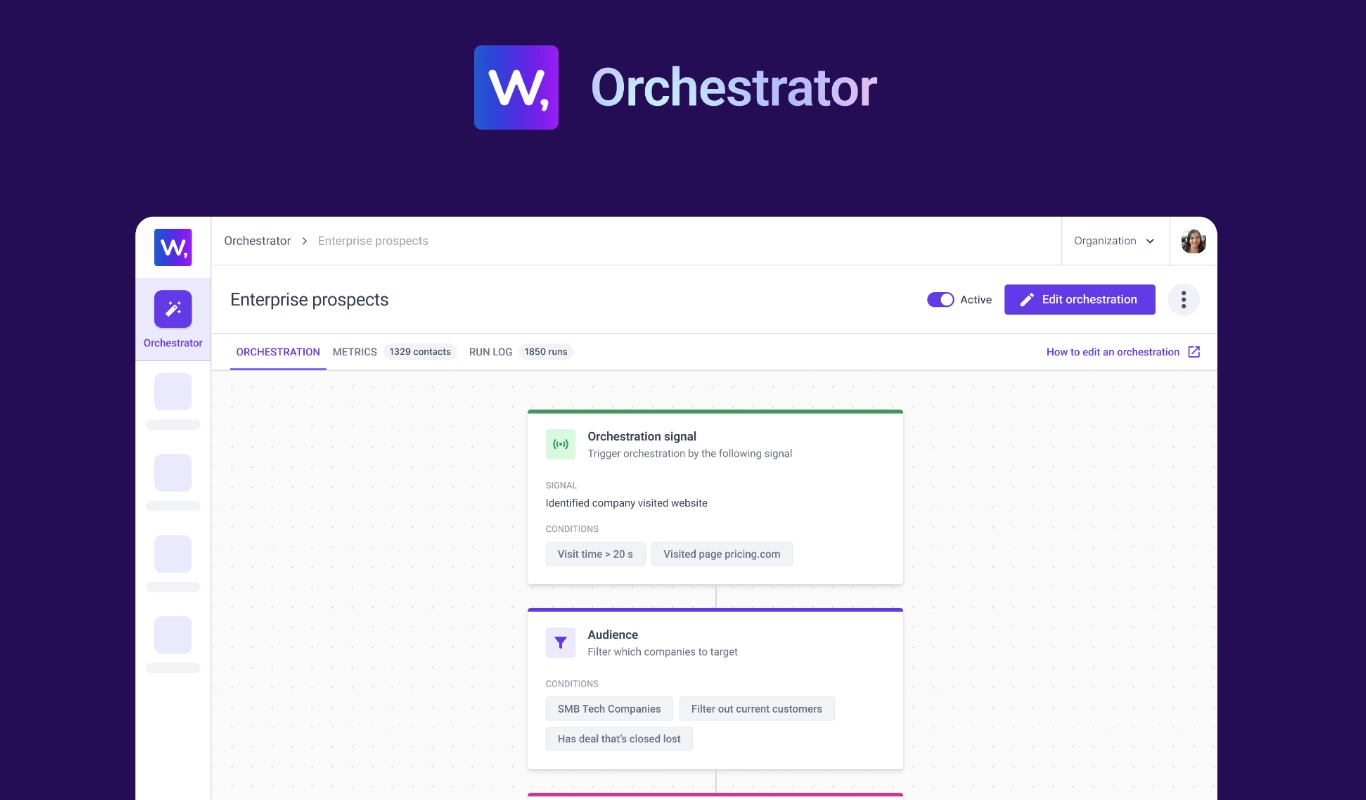

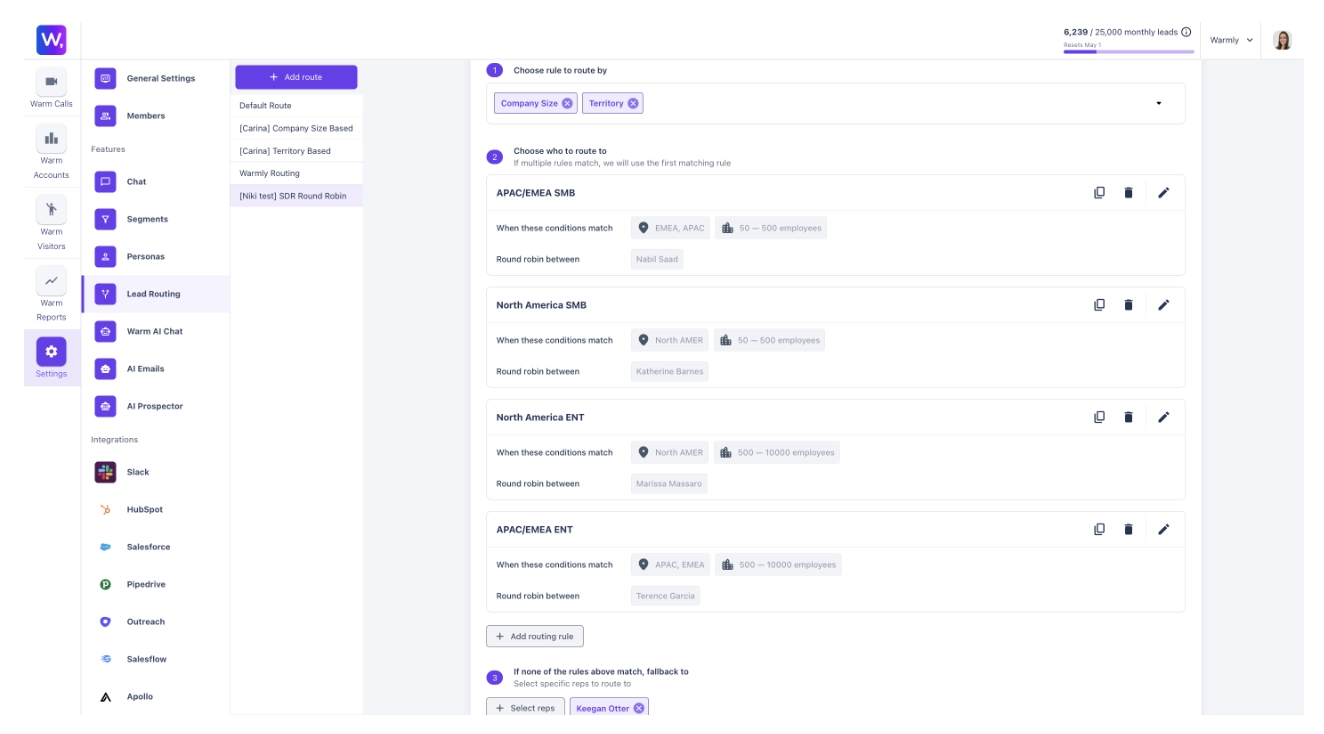

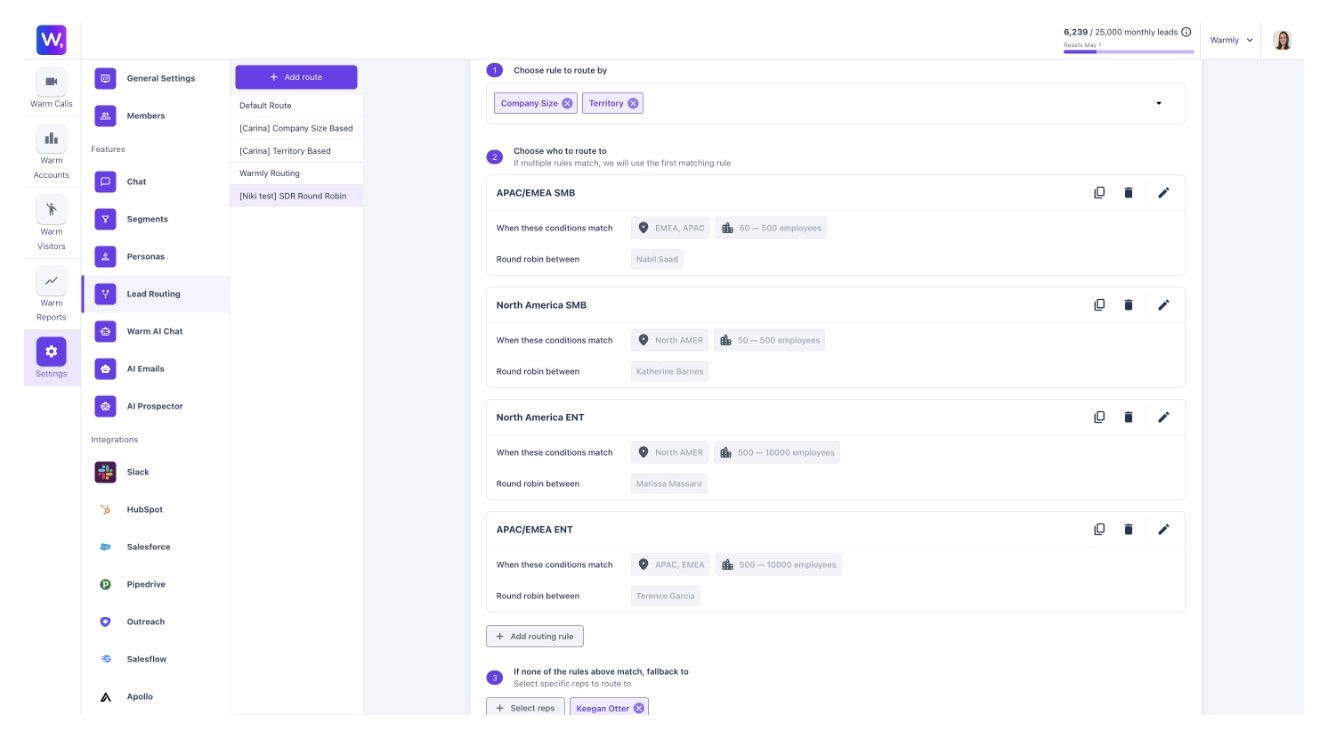

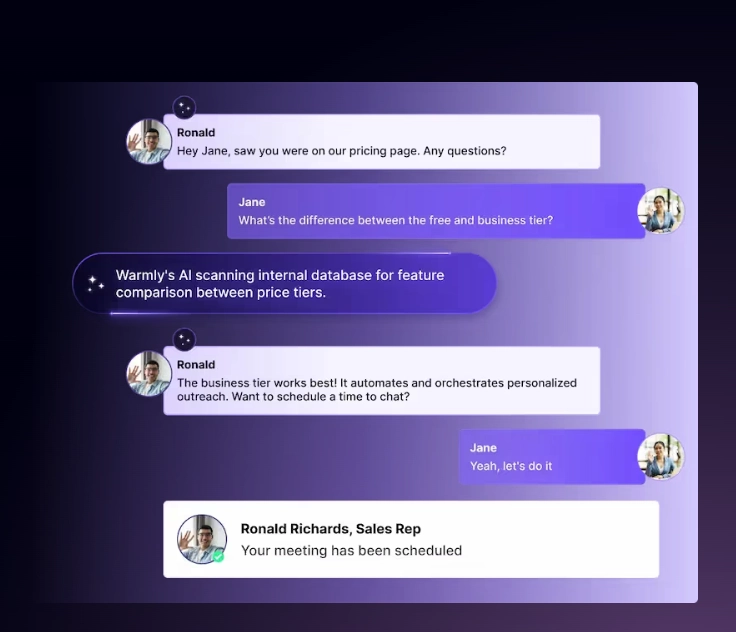

6. Multi-Agent Coordination

Real GTM involves multiple motions happening simultaneously:

- SDR outbound to new contacts

- Marketing nurture to known leads

- AE follow-up on active opportunities

- CS expansion plays on existing accounts

Short horizon agents step on each other. One sends an email while another triggers a LinkedIn sequence while marketing drops them into a nurture campaign. The prospect gets three touches in one day from the same company.

Long horizon agents share context. They know what other agents have done, what's planned, and coordinate to avoid conflicts. The AI prospector knows the AI nurture agent already engaged this contact, so it waits.
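A minimal sketch of that coordination pattern: every agent consults one shared touch log before acting and backs off if any agent touched the contact too recently. `SharedTouchLog`, the agent names, and the 3-day gap are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class SharedTouchLog:
    """Touch history shared by every agent; each agent checks it before acting."""

    min_gap: timedelta = timedelta(days=3)  # assumed cross-channel frequency limit
    touches: dict[str, list[tuple[datetime, str, str]]] = field(default_factory=dict)

    def can_touch(self, contact_id: str, now: datetime) -> bool:
        """True only if no agent has touched this contact within min_gap."""
        history = self.touches.get(contact_id, [])
        return all(now - when >= self.min_gap for when, _agent, _channel in history)

    def try_touch(self, contact_id: str, agent: str, channel: str, now: datetime) -> bool:
        """Record the touch and return True, or return False so the calling agent waits."""
        if not self.can_touch(contact_id, now):
            return False
        self.touches.setdefault(contact_id, []).append((now, agent, channel))
        return True


log = SharedTouchLog()
t0 = datetime(2026, 1, 15, 9, 0)
first = log.try_touch("person:john", "nurture_agent", "email", t0)
second = log.try_touch("person:john", "prospector_agent", "linkedin", t0 + timedelta(hours=2))
later = log.try_touch("person:john", "prospector_agent", "linkedin", t0 + timedelta(days=4))
```

The prospector's second attempt is refused because the nurture agent already touched John two hours earlier; four days later it goes through. In a distributed deployment the check and the append would need to be atomic (for example, a database transaction) so two agents can't both pass the check at once.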

Architecture Deep Dive: How Long Horizon Actually Works

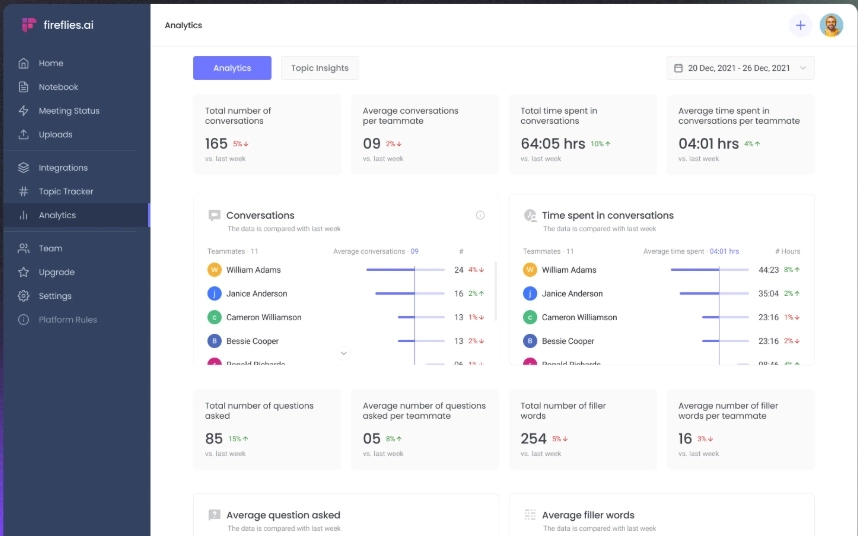

Let me show you what this looks like in practice. This is the architecture we've built at Warmly after years of iterating on what actually works for AI marketing agents.

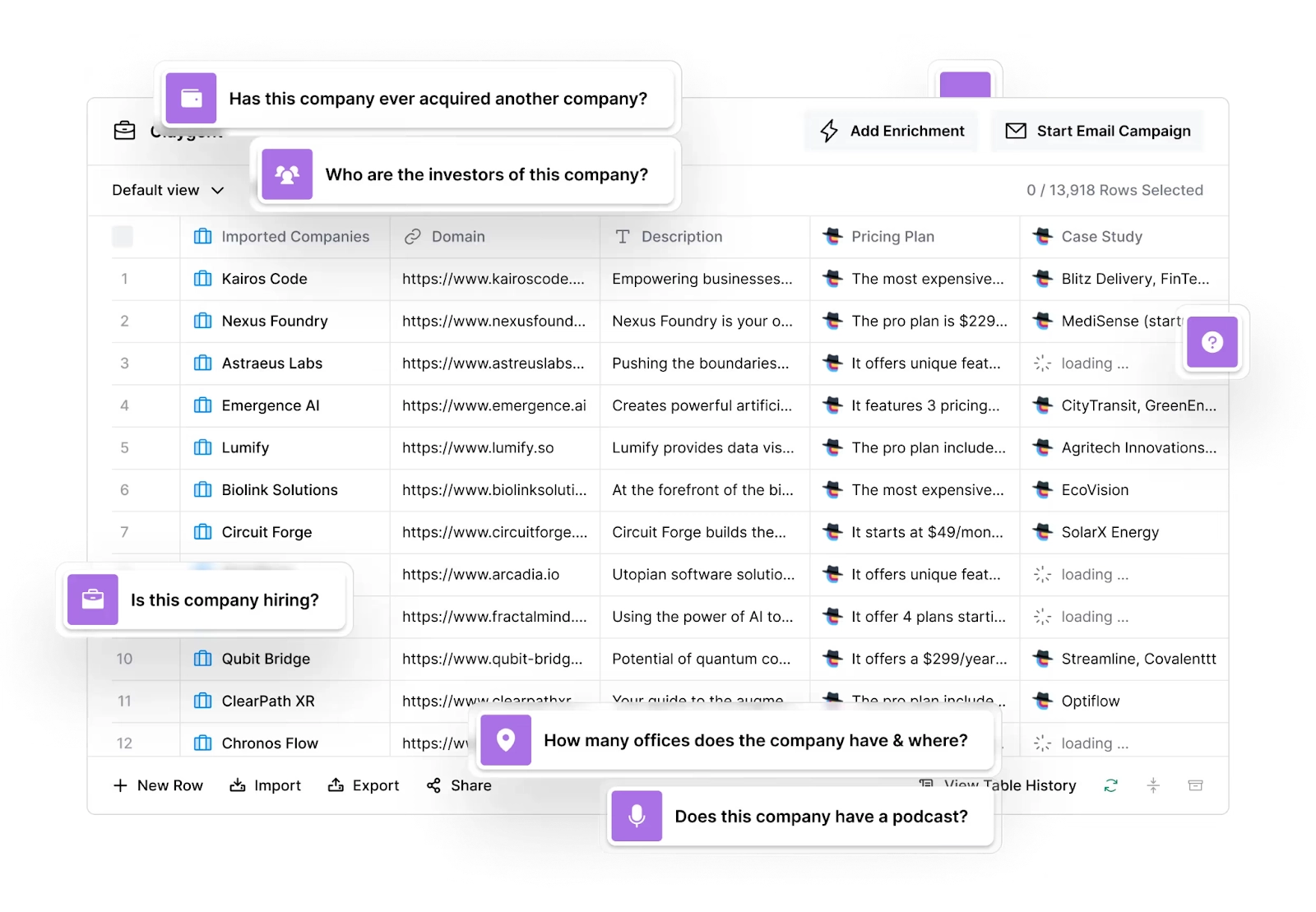

Layer 1: The Context Graph (World Model)

A Context Graph (sometimes called a Common Customer Data Model) is the foundation of long horizon GTM intelligence. Unlike flat CRM records or simple data warehouses, a context graph captures how decisions happen: what decisions were made, what changed, and why an account moved the way it did.

This is increasingly recognized as critical infrastructure. Foundation Capital argues that one of the next trillion-dollar opportunities in AI will come from context graphs: systems that capture decision traces. Companies like Vendelux and Writer are building context graphs for specific GTM use cases.

The key insight: Salesforce may be your system of record, but it's not your source of truth. In an agent era, that gap becomes a hard limit because agents don't just need final fields. They need comprehensive context and decision traces. Enterprise systems were built to store records (data and state), not to capture decision logic as it unfolds (reasoning and context).

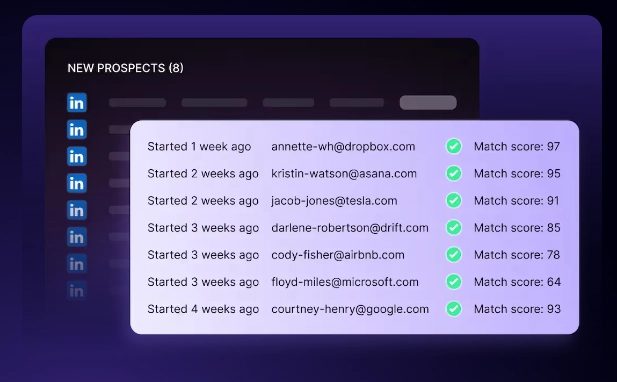

Everything starts with unified entity resolution. You can't have long horizon reasoning if you can't answer "is this the same person across my 12 systems?"

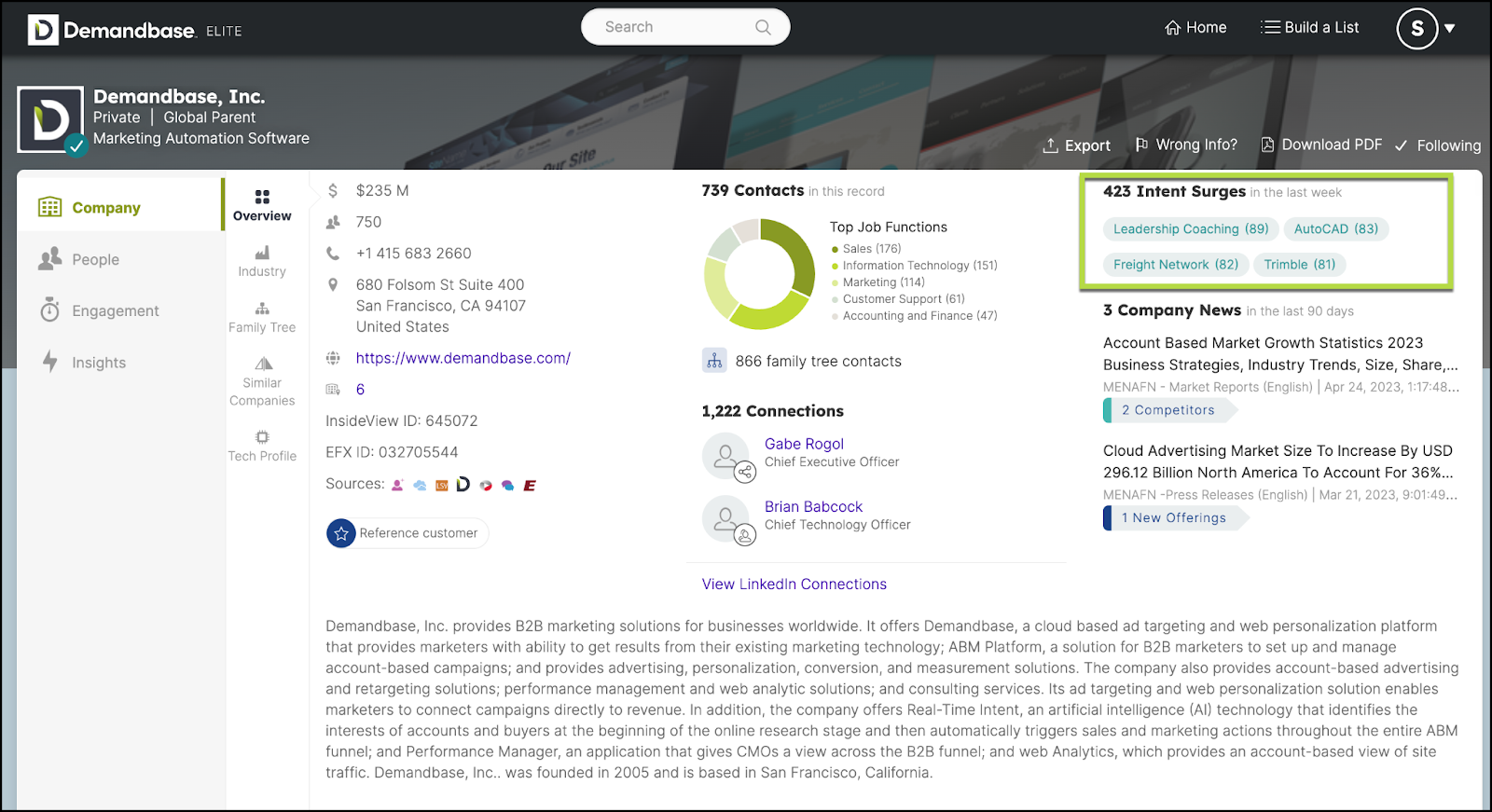

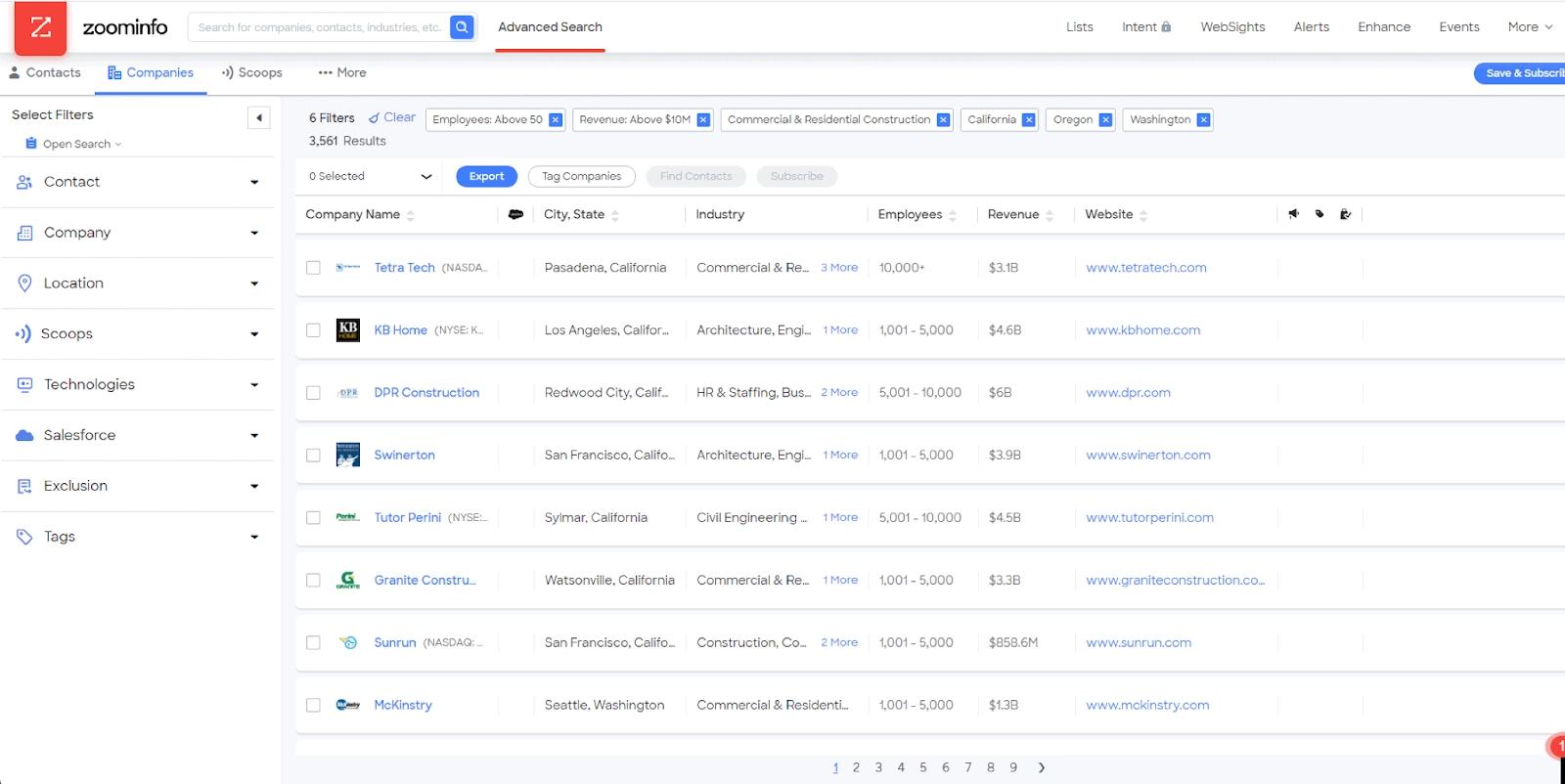

Our approach uses multi-vendor consensus:

- Query Clearbit, ZoomInfo, PDL, Demandbase for the same entity

- Compare returned data across vendors

- Accept matches where 2+ vendors agree

- Flag conflicts for human review

This achieves ~90% accuracy on identity resolution. Good enough for AI to operate autonomously while flagging edge cases.
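The consensus rule can be sketched per field: normalize each vendor's value, accept it when at least two vendors agree and no other value ties, and route everything else to human review. The vendor names and flat record shape are hypothetical; real vendor APIs return richer, differently shaped payloads that would first need mapping to common fields.

```python
from collections import Counter
from typing import Optional


def consensus(
    responses: dict[str, dict[str, Optional[str]]], min_agree: int = 2
) -> tuple[dict[str, str], dict[str, dict[str, str]]]:
    """Accept a field value when at least `min_agree` vendors return it; route everything else to review."""
    accepted: dict[str, str] = {}
    needs_review: dict[str, dict[str, str]] = {}
    for f in sorted({f for record in responses.values() for f in record}):
        # Raw values per vendor, skipping vendors that returned nothing for this field.
        raw = {vendor: rec[f] for vendor, rec in responses.items() if rec.get(f)}
        if not raw:
            continue
        # Normalize before comparing so "John@Acme.com" matches "john@acme.com".
        ranked = Counter(v.strip().lower() for v in raw.values()).most_common(2)
        clear_winner = len(ranked) == 1 or ranked[0][1] > ranked[1][1]
        if ranked[0][1] >= min_agree and clear_winner:
            accepted[f] = ranked[0][0]
        else:
            needs_review[f] = raw  # conflicting or single-source values
    return accepted, needs_review


responses = {
    "vendor_a": {"email": "john@acme.com", "title": "VP Sales", "phone": "+1 555 0100"},
    "vendor_b": {"email": "John@Acme.com", "title": "Head of Sales", "phone": "+1 555 0199"},
    "vendor_c": {"email": "j.doe@acme.io", "title": "VP Sales", "phone": None},
}
accepted, needs_review = consensus(responses)
```

Here email and title each have two agreeing vendors and are accepted, while the two different phone numbers are flagged for review.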

The graph contains these core entities:

- Company: Firmographics, technographics, ICP scoring, engagement history

- Person: Contact data, role, seniority, social presence, communication preferences

- Employment: Links people to companies with temporal awareness (current vs. past roles)

- Deal: Opportunities with stages, buying committee, activity timeline

- Activity: Every touchpoint across every channel, linked to entities

The magic is in relationships:

- Person A works at Company B

- Person A is champion on Deal C

- Person A previously worked at Company D (which is your customer)

- Company B competes with Company E

This relationship-first structure is what enables person-based signals to actually drive intelligent action.
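The relationships above can be modeled as typed, time-bounded edges. The sketch below keeps them in an in-memory list; a real system would use a graph database or indexed tables. `ContextGraph` and its methods are hypothetical. The `end` date on an employment edge gives the temporal awareness that separates current from past roles.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class Edge:
    src: str
    rel: str
    dst: str
    start: Optional[date] = None
    end: Optional[date] = None  # None means the relationship is still current


class ContextGraph:
    """In-memory list of typed, time-bounded edges; a real system would index these."""

    def __init__(self) -> None:
        self.edges: list[Edge] = []
        self.customers: set[str] = set()

    def add(self, src: str, rel: str, dst: str,
            start: Optional[date] = None, end: Optional[date] = None) -> None:
        self.edges.append(Edge(src, rel, dst, start, end))

    def neighbors(self, src: str, rel: str, current: Optional[bool] = None) -> list[str]:
        """Targets of `rel` edges from `src`; current=True/False keeps only ongoing/ended edges."""
        return [
            e.dst for e in self.edges
            if e.src == src and e.rel == rel and (current is None or (e.end is None) == current)
        ]

    def former_employers_that_are_customers(self, person: str) -> list[str]:
        """Warm-path check: past employers of this person that are also your customers."""
        return [c for c in self.neighbors(person, "works_at", current=False) if c in self.customers]


g = ContextGraph()
g.add("person:a", "works_at", "company:b", start=date(2024, 6, 1))
g.add("person:a", "works_at", "company:d", start=date(2020, 1, 1), end=date(2024, 5, 31))
g.add("person:a", "champion_on", "deal:c")
g.add("company:b", "competes_with", "company:e")
g.customers.add("company:d")
```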

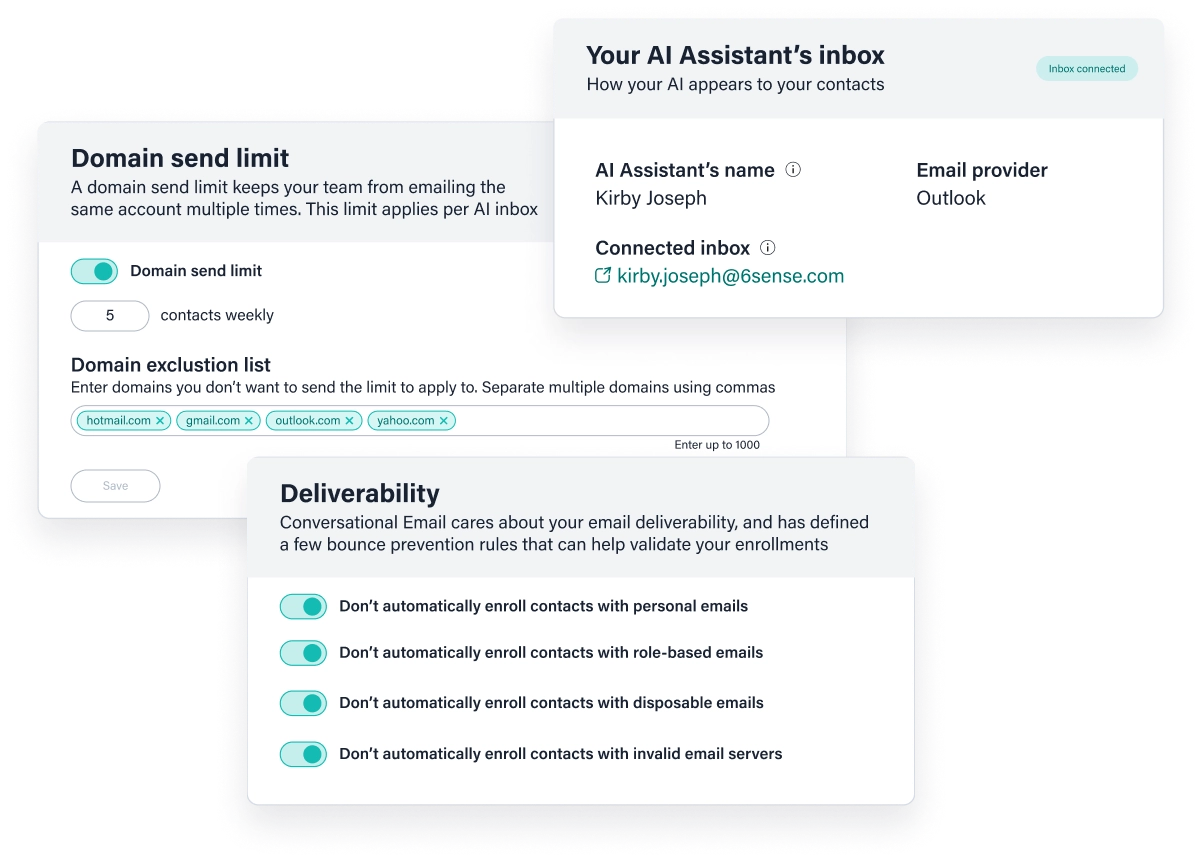

Layer 2: The Decision Ledger (Audit Trail for AI)

An AI audit trail documents what the agent did, when, why, and with what data. This isn't just nice for debugging. It's increasingly required for compliance and trust.

The EU AI Act mandates that high-risk AI systems maintain decision logs for oversight. The FINOS AI Governance Framework recommends implementing "Chain of Thought" logging that allows a human reviewer to step through the agent's decision-making process.

For GTM specifically, audit trails answer the questions your leadership will ask:

- "Why did the AI send that message to the CEO of our target account?"

- "What information did the system have when it made that routing decision?"

- "Did this outreach sequence actually contribute to the deal that closed?"

Every decision the system makes gets logged immutably:

```yaml
# Decision Record
timestamp: 2026-01-15T10:30:00Z
decision_type: channel_selection
entity: person:uuid-123
context_snapshot: {}  # placeholder for the full entity state at decision time
decision: linkedin_message
reasoning: >-
  High LinkedIn engagement, email bounced previously,
  similar personas responded 40% better to LinkedIn
policy_version: v2.3.1
outcome: null  # filled in when we observe the result
```

The key insight: Audit trails turn AI from a "black box" into a "glass box" where every insight has a traceable lineage. When a discrepancy arises, you can trace it back to the exact step where the logic diverged.

Three months later, when we know whether this outreach contributed to a closed deal, we update the outcome field. Now we have labeled training data for improving the system. This creates a closed loop between decisions and outcomes that enables continuous improvement.
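One way to keep the ledger immutable while still attaching outcomes is to append an outcome entry that points at the original decision, rather than editing the decision's `outcome` field in place. The sketch below also chains each entry to the previous entry's SHA-256 hash so any later edit to history is detectable. `DecisionLedger` and its methods are hypothetical, and hash chaining is a swapped-in technique, not necessarily how any production ledger works.

```python
import hashlib
import json
from typing import Any


class DecisionLedger:
    """Append-only log; each entry stores the previous entry's hash, so edits to history are detectable."""

    def __init__(self) -> None:
        self.entries: list[dict[str, Any]] = []

    @staticmethod
    def _digest(prev: str, body: dict[str, Any]) -> str:
        payload = json.dumps({"prev": prev, "body": body}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def _append(self, body: dict[str, Any]) -> str:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        digest = self._digest(prev, body)
        self.entries.append({"prev": prev, "body": body, "hash": digest})
        return digest

    def log_decision(self, decision: dict[str, Any]) -> str:
        return self._append({"kind": "decision", **decision})

    def log_outcome(self, decision_hash: str, outcome: str) -> str:
        """Attach an outcome by appending a new entry that references the decision."""
        if not any(e["hash"] == decision_hash and e["body"]["kind"] == "decision" for e in self.entries):
            raise KeyError("unknown decision")
        return self._append({"kind": "outcome", "decision": decision_hash, "outcome": outcome})

    def verify(self) -> bool:
        """Recompute every hash; False means some entry was altered after it was written."""
        prev = "0" * 64
        for e in self.entries:
            if e["prev"] != prev or self._digest(prev, e["body"]) != e["hash"]:
                return False
            prev = e["hash"]
        return True

    def labeled_examples(self) -> list[tuple[dict[str, Any], str]]:
        """Pair each decision with its observed outcome: labeled data for improving policies."""
        outcomes = {e["body"]["decision"]: e["body"]["outcome"]
                    for e in self.entries if e["body"]["kind"] == "outcome"}
        return [(e["body"], outcomes[e["hash"]]) for e in self.entries
                if e["body"]["kind"] == "decision" and e["hash"] in outcomes]


ledger = DecisionLedger()
h = ledger.log_decision({"decision_type": "channel_selection", "entity": "person:uuid-123",
                         "decision": "linkedin_message", "policy_version": "v2.3.1"})
ledger.log_outcome(h, "contributed_to_closed_won")
examples = ledger.labeled_examples()
```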

Layer 3: The Policy Engine

Policies sit between raw AI capabilities and production execution. They encode:

- Business rules (ICP definitions, territory assignments)

- Compliance constraints (touch frequency limits, opt-out handling)

- Learned preferences (channel selection by persona, timing by seniority)

Policies are versioned like code. When outcomes show something isn't working, you update the policy and track exactly what changed.

Example policy evolution:

- v1.0: "Always email first, then LinkedIn"

- v2.0: "Email first for Directors, LinkedIn first for VPs" (learned from 6 months of outcomes)

- v2.1: "LinkedIn first for VPs, except on Mondays" (learned from engagement data)
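Versioning policies like code can be as simple as a registry of named functions, with every decision stamped with the version that produced it. The sketch below encodes v1.0, v2.0, and v2.1 as written. Two gaps are filled by assumption and marked in comments: seniorities other than Director and VP default to email first, and VPs fall back to email first on Mondays.

```python
from typing import Callable

# A policy maps (seniority, weekday) to an ordered list of channels to try.
Policy = Callable[[str, str], list[str]]


def v1_0(seniority: str, weekday: str) -> list[str]:
    return ["email", "linkedin"]  # always email first, then LinkedIn


def v2_0(seniority: str, weekday: str) -> list[str]:
    if seniority == "VP":
        return ["linkedin", "email"]
    return ["email", "linkedin"]  # Directors (and, by assumption, everyone else) get email first


def v2_1(seniority: str, weekday: str) -> list[str]:
    if seniority == "VP" and weekday != "Monday":
        return ["linkedin", "email"]
    return ["email", "linkedin"]  # assumption: VPs fall back to email first on Mondays


POLICIES: dict[str, Policy] = {"v1.0": v1_0, "v2.0": v2_0, "v2.1": v2_1}
ACTIVE_VERSION = "v2.1"


def decide(seniority: str, weekday: str, version: str = ACTIVE_VERSION) -> dict[str, object]:
    """Apply one policy version and stamp that version on the result for the decision ledger."""
    return {"channels": POLICIES[version](seniority, weekday), "policy_version": version}
```

Keeping old versions callable means you can replay past decisions under a new policy and compare, and the `policy_version` stamp ties every logged decision to the exact rule that produced it.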

Layer 4: Computed Columns (Token Efficiency)

Here's something most people miss: raw data is too expensive for LLMs.

If you send an AI agent the full activity history for a company (1,000+ events), you're burning tokens and getting worse decisions. The model gets lost in noise.

Solution: pre-compute meaningful summaries.

Instead of:

```yaml
activities: [1000 raw page view events...]
```

The context graph provides:

```yaml
engagement_score: 85
buying_stage: evaluation
last_pricing_view: 2 days ago
sessions_30d: 12
key_pages: [pricing, vs-competitor, case-studies]
engagement_trend: increasing
champion_identified: true
```

The AI gets meaning without noise. This reduces token consumption by 10-100x while actually improving decision quality.
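A sketch of how a few of these computed columns might be derived from raw page views. It covers `sessions_30d`, last pricing view, `key_pages`, and `engagement_trend`; `engagement_score`, `buying_stage`, and `champion_identified` are omitted because they depend on scoring models this post doesn't specify. `PageView`, `summarize`, and the trend rule (more views in the last 30 days than in the 30 before) are assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Any


@dataclass(frozen=True)
class PageView:
    at: datetime
    session_id: str
    page: str


def summarize(events: list[PageView], now: datetime) -> dict[str, Any]:
    """Collapse raw page views into a few computed columns an LLM can read cheaply."""
    window = timedelta(days=30)
    last_30 = [e for e in events if now - e.at <= window]
    prior_30 = [e for e in events if window < now - e.at <= 2 * window]
    pricing_views = [e.at for e in events if e.page == "pricing"]
    page_counts = Counter(e.page for e in last_30)
    return {
        "sessions_30d": len({e.session_id for e in last_30}),
        "last_pricing_view_days_ago": (now - max(pricing_views)).days if pricing_views else None,
        "key_pages": [page for page, _ in page_counts.most_common(3)],
        # Assumed trend rule: more views in the last 30 days than in the 30 days before.
        "engagement_trend": "increasing" if len(last_30) > len(prior_30) else "flat_or_decreasing",
    }


now = datetime(2026, 1, 15, 12, 0)
events = [
    PageView(now - timedelta(days=45), "s0", "blog"),
    PageView(now - timedelta(days=5), "s1", "case-studies"),
    PageView(now - timedelta(days=5), "s1", "pricing"),
    PageView(now - timedelta(days=2), "s2", "pricing"),
    PageView(now - timedelta(days=2), "s2", "vs-competitor"),
]
summary = summarize(events, now)
```

The summary is a handful of fields regardless of how many raw events feed it, which is where the token savings come from.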

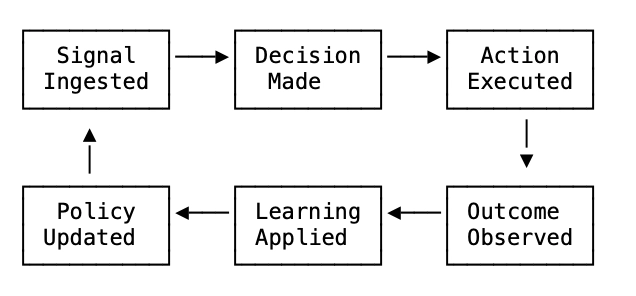

Layer 5: The Learning Loop

This is where long horizon pays off:

Signal Ingested → Decision Made → Action Executed → Outcome Observed → Learning Applied → Policy Updated

Each step is logged. When outcomes arrive (reply received, meeting booked, deal closed), they're connected back to the decisions that preceded them.

Over quarters, the system learns:

- Which channels work for which personas

- What timing patterns drive responses

- Which message angles resonate with specific ICPs

- When to escalate to humans vs. proceed autonomously

This isn't fine-tuning the model. It's improving the policies the model operates under. Much more practical and controllable.
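As a minimal illustration of "improving the policies, not the model", the sketch below tallies attributed outcomes per (persona, channel) and proposes the channel with the best observed success rate once it has enough samples. This is a swapped-in, deliberately simple technique (empirical rates with a minimum-sample threshold), not the post's described method. A production loop would also need exploration, such as a bandit algorithm, and would have to handle outcomes that arrive months after the decision. `ChannelStats` is hypothetical.

```python
from collections import defaultdict


class ChannelStats:
    """Tallies outcomes per (persona, channel) and proposes the channel with the best observed rate."""

    def __init__(self, min_samples: int = 20, default: str = "email") -> None:
        self.min_samples = min_samples  # ignore channels with too few observations
        self.default = default          # fallback until enough outcomes exist
        self.attempts: defaultdict = defaultdict(int)
        self.successes: defaultdict = defaultdict(int)

    def record(self, persona: str, channel: str, success: bool) -> None:
        """Called when an outcome arrives and is attributed to an earlier decision."""
        self.attempts[(persona, channel)] += 1
        self.successes[(persona, channel)] += int(success)

    def best_channel(self, persona: str) -> str:
        rates = {
            channel: self.successes.get((p, channel), 0) / n
            for (p, channel), n in self.attempts.items()
            if p == persona and n >= self.min_samples
        }
        return max(rates, key=rates.__getitem__) if rates else self.default


stats = ChannelStats(min_samples=3)
for ok in (True, True, False):
    stats.record("VP", "linkedin", ok)
for ok in (True, False, False):
    stats.record("VP", "email", ok)
stats.record("VP", "phone", True)  # one sample: below min_samples, so it is ignored
```

The output of `best_channel` would feed the next policy version rather than changing model weights, which keeps every change reviewable.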

Use Cases by Time Horizon

Not every GTM motion needs long horizon agents. Here's how to think about it:

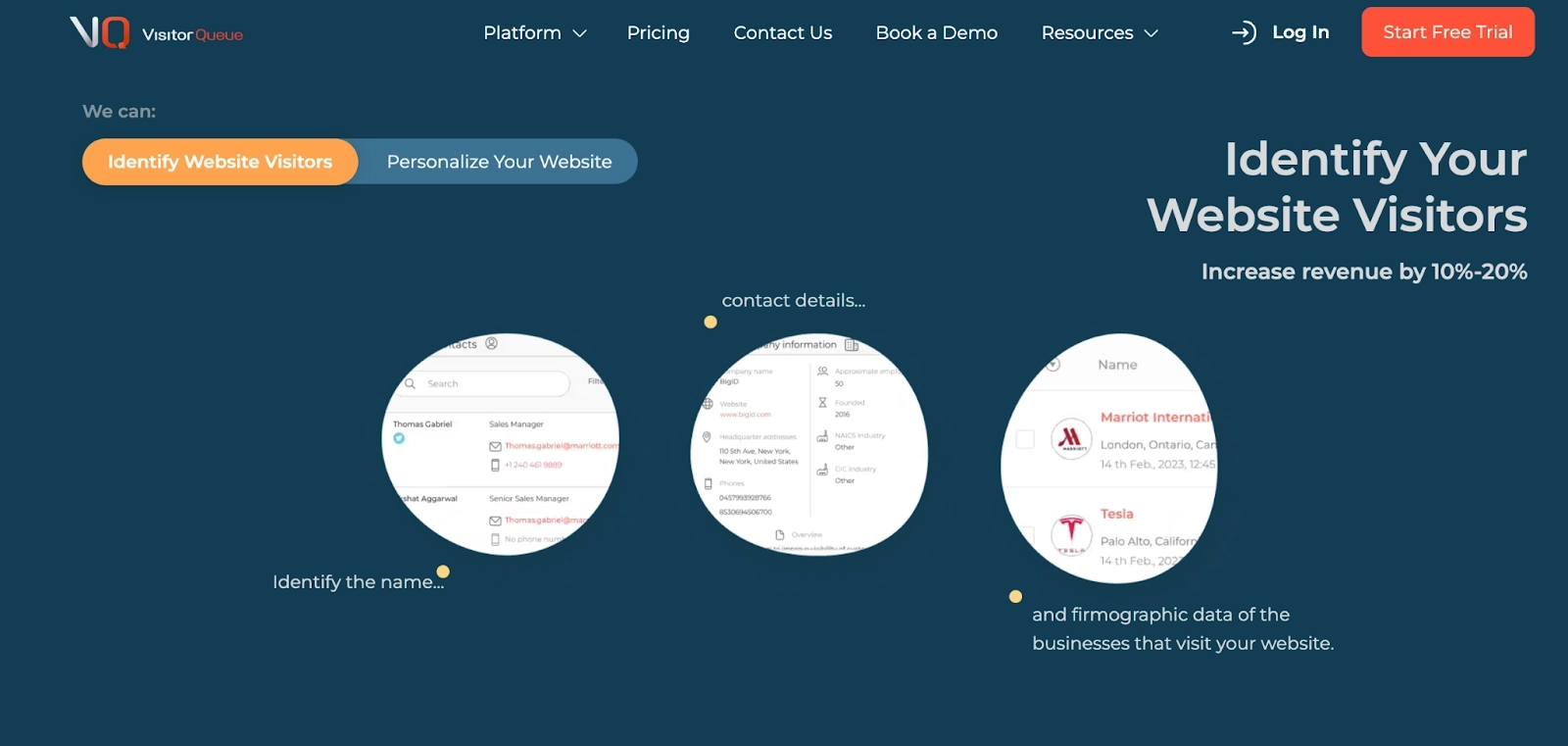

7-Day Horizon: Tactical Response

Use case: Responding to high-intent website visitors

What matters: Speed, relevance, basic personalization

Architecture needs: Real-time signals, basic enrichment, fast execution

For this, traditional AI agentic workflows work fine. Someone hits your pricing page, you want to engage quickly. A short horizon agent can handle this.

Tools that work: Most AI SDR platforms, basic automation

30-Day Horizon: Campaign Execution

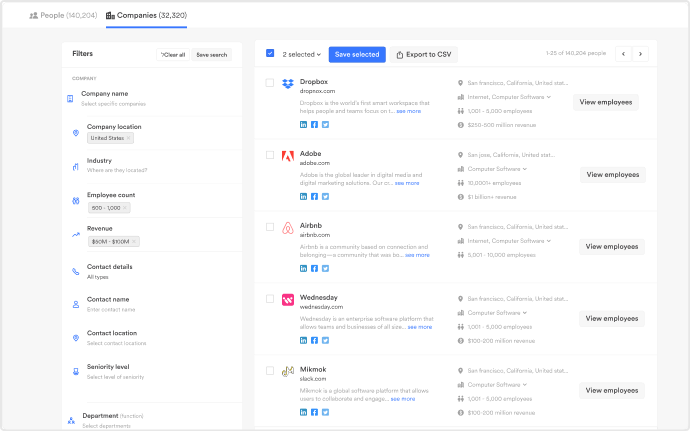

Use case: Running outbound sequences to target accounts

What matters: Message variation, response handling, sequence optimization

Architecture needs: Contact-level memory, A/B testing, basic outcome tracking

This is where most "AI SDR" tools live. They can run a 4-week sequence without embarrassing repetition. But they struggle with anything longer.

Limitation: If the prospect doesn't respond in 30 days, the system forgets them. When they return 60 days later showing high intent, it starts over.

90-Day Horizon: Deal Acceleration

Use case: Supporting opportunities through the sales cycle

What matters: Buying committee tracking, multi-stakeholder coordination, deal intelligence

Architecture needs: Entity relationships, decision traces, cross-channel coordination

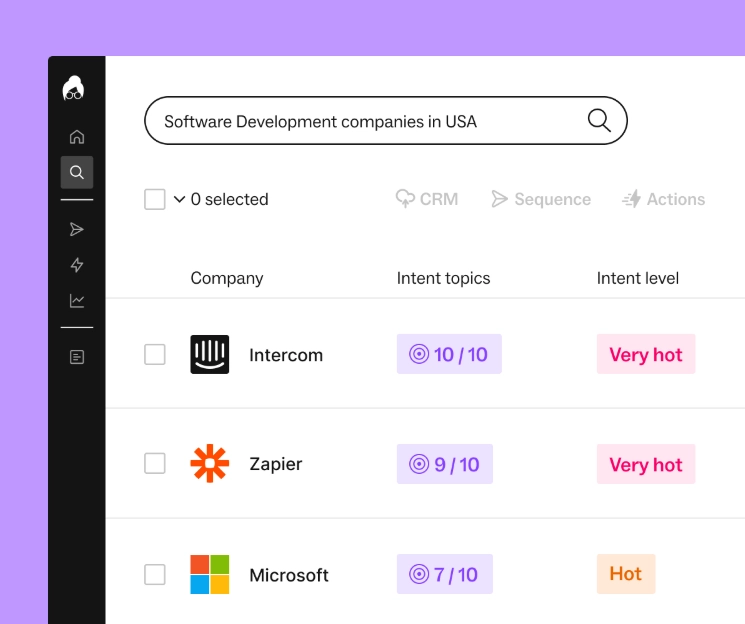

This is where long horizon agents shine. The system knows:

- Who's in the buying committee and their roles

- What each stakeholder has seen and responded to

- Which objections have been raised and addressed

- When the deal is at risk based on engagement patterns

Requirement: Context Graph + Decision Ledger architecture

180-Day+ Horizon: Strategic ABM

Use case: Long-term account development, expansion plays, re-engagement

What matters: Relationship continuity, organizational memory, outcome attribution

Architecture needs: Full long horizon architecture with policy evolution

Enterprise deals and expansion motions require AI that thinks in quarters. The champion you cultivated last year might change jobs. The deal you lost might be winnable when their contract renews. The pattern that worked for similar accounts should inform new approaches.

This level requires the full stack: Context Graph, Decision Ledger, Policy Engine, and Learning Loop.

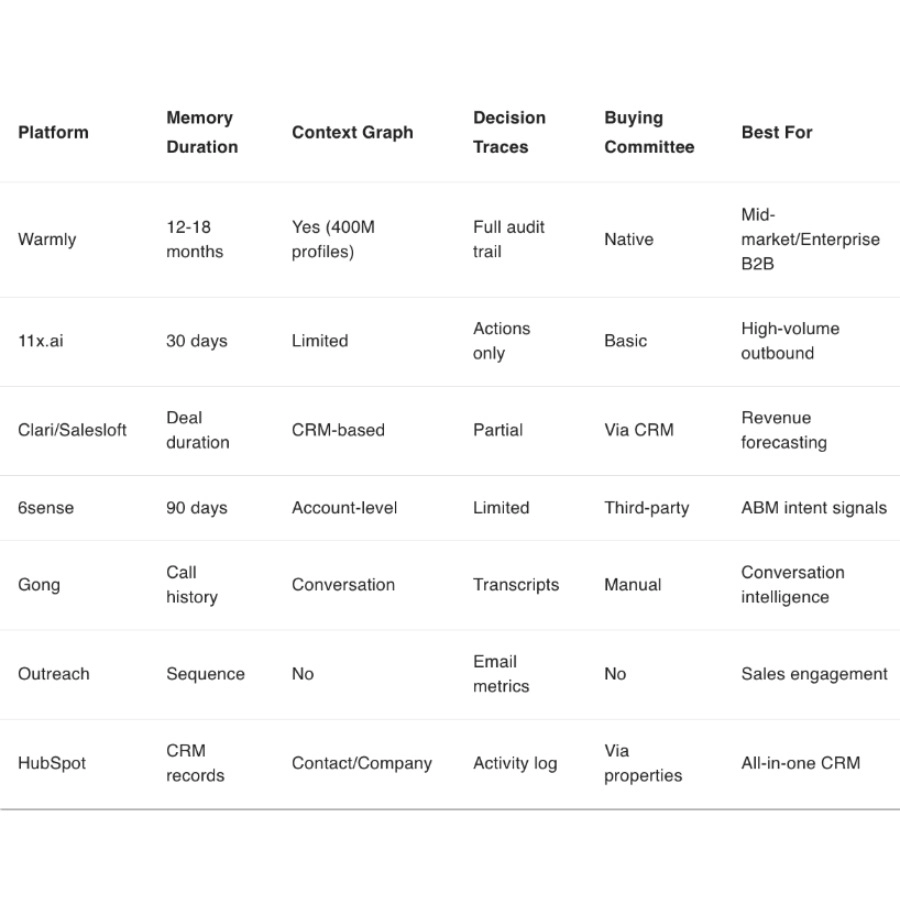

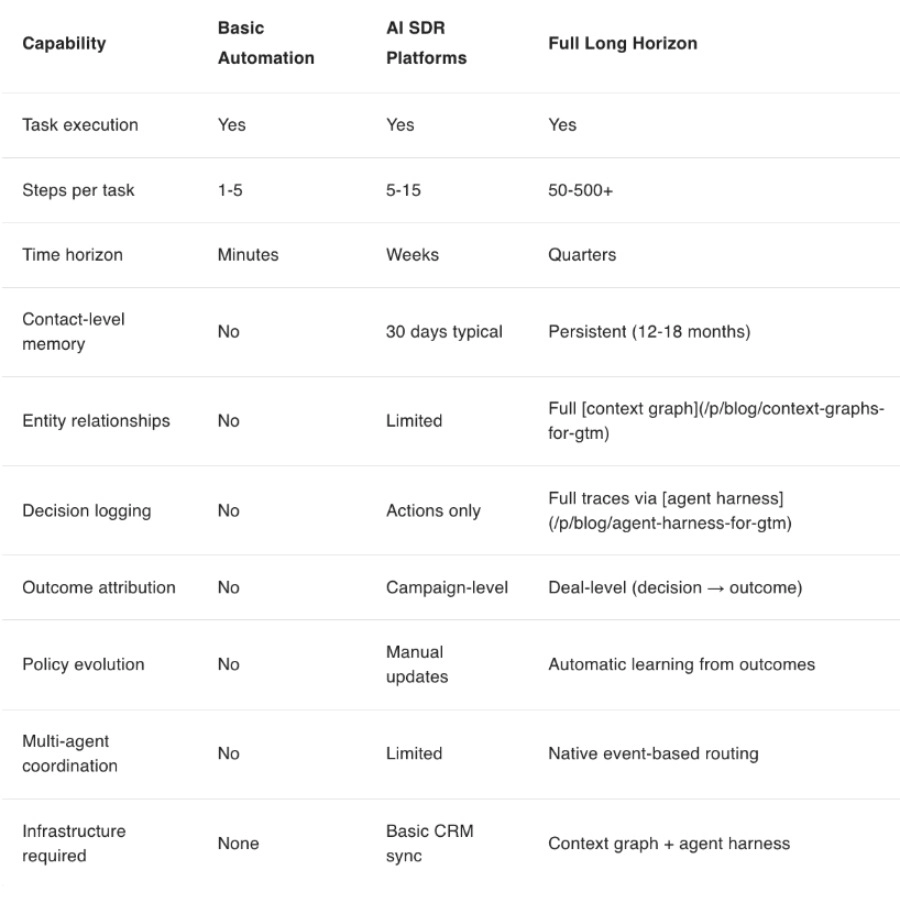

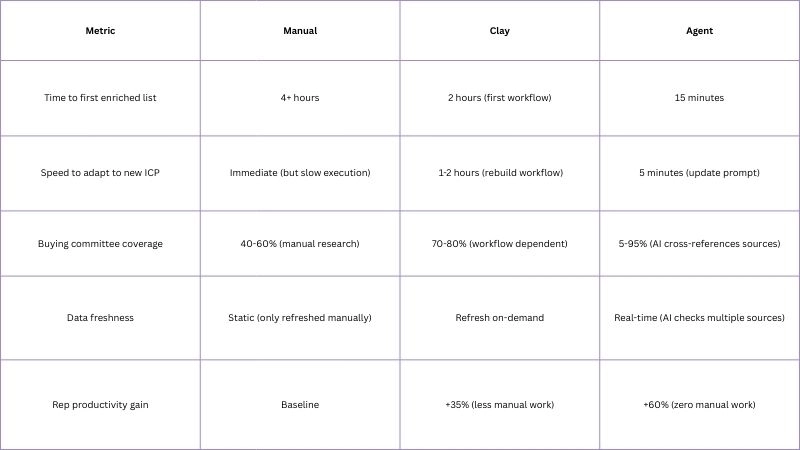

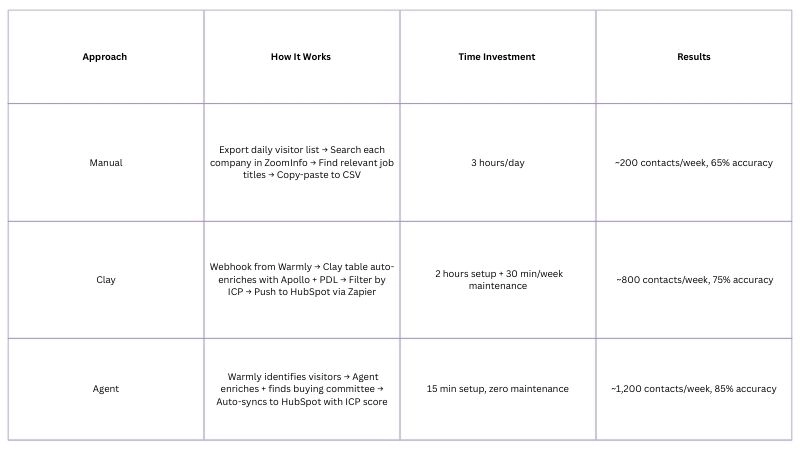

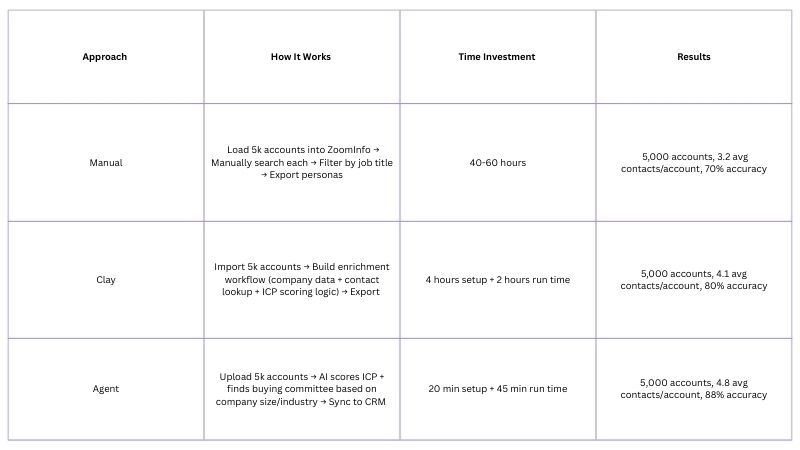

Implementation Comparison: Long Horizon Capabilities

Here's an honest assessment of how different approaches stack up:

Where Traditional Tools Work

If your sales cycle is under 14 days and you're optimizing for volume, you don't need long horizon complexity. Agentic automation at the task level is sufficient.

Tools like basic Outreach/Salesloft sequences, simple AI email writers, and standard marketing automation handle this fine.

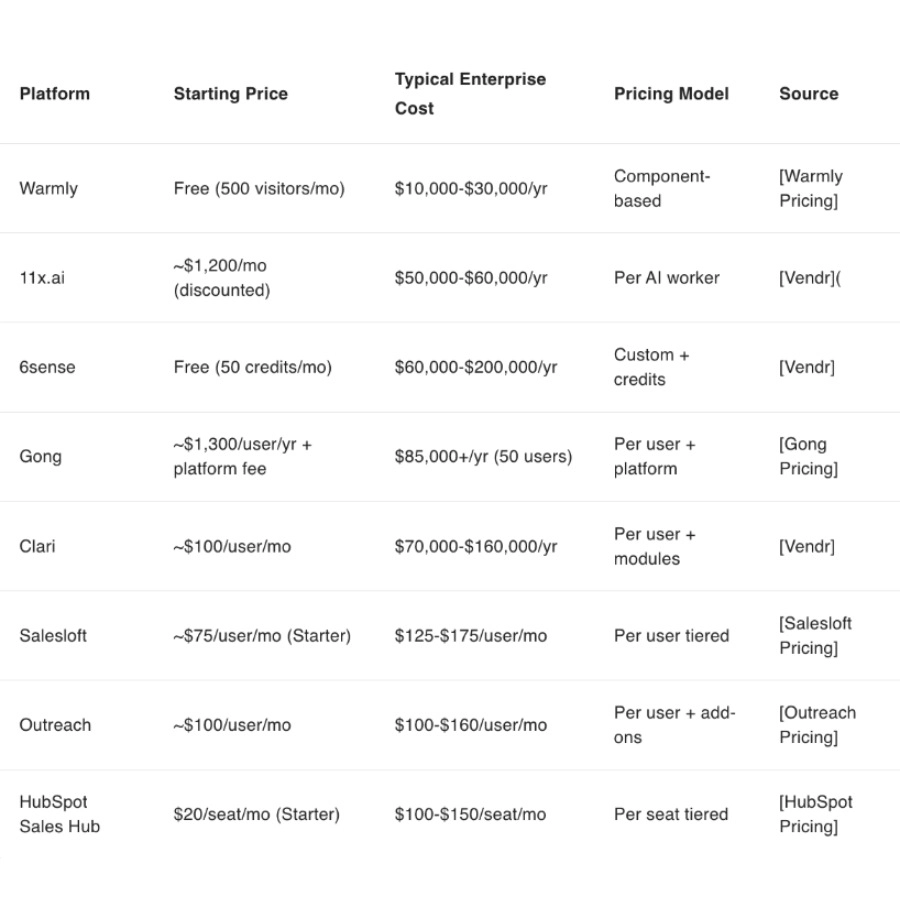

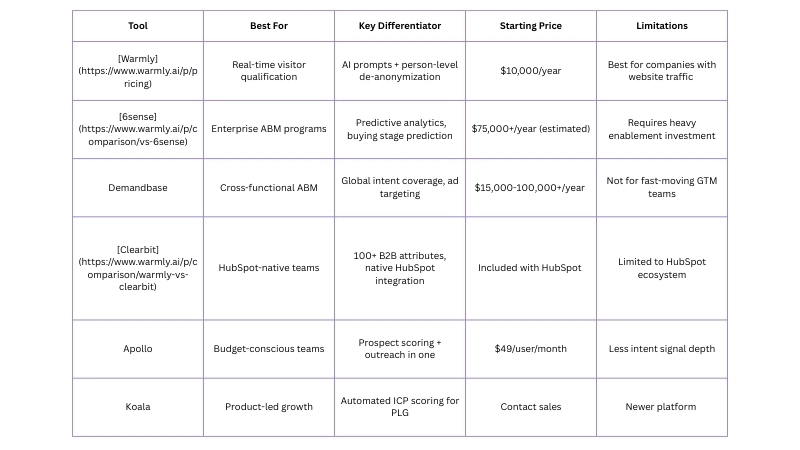

Long Horizon Platform Comparison (2026)

Reading the table:

- Memory Duration: How long does context persist for a specific contact?

- Context Graph: Does the system model entity relationships beyond flat records?

- Decision Traces: Can you see why the AI made a specific decision?

- Buying Committee: Does the system understand multi-stakeholder deals?

Where Long Horizon Is Required

- Enterprise sales (60+ day cycles)

- ABM programs targeting specific accounts over time

- Expansion revenue requiring relationship continuity

- Any motion where you need to know "what actually worked?"

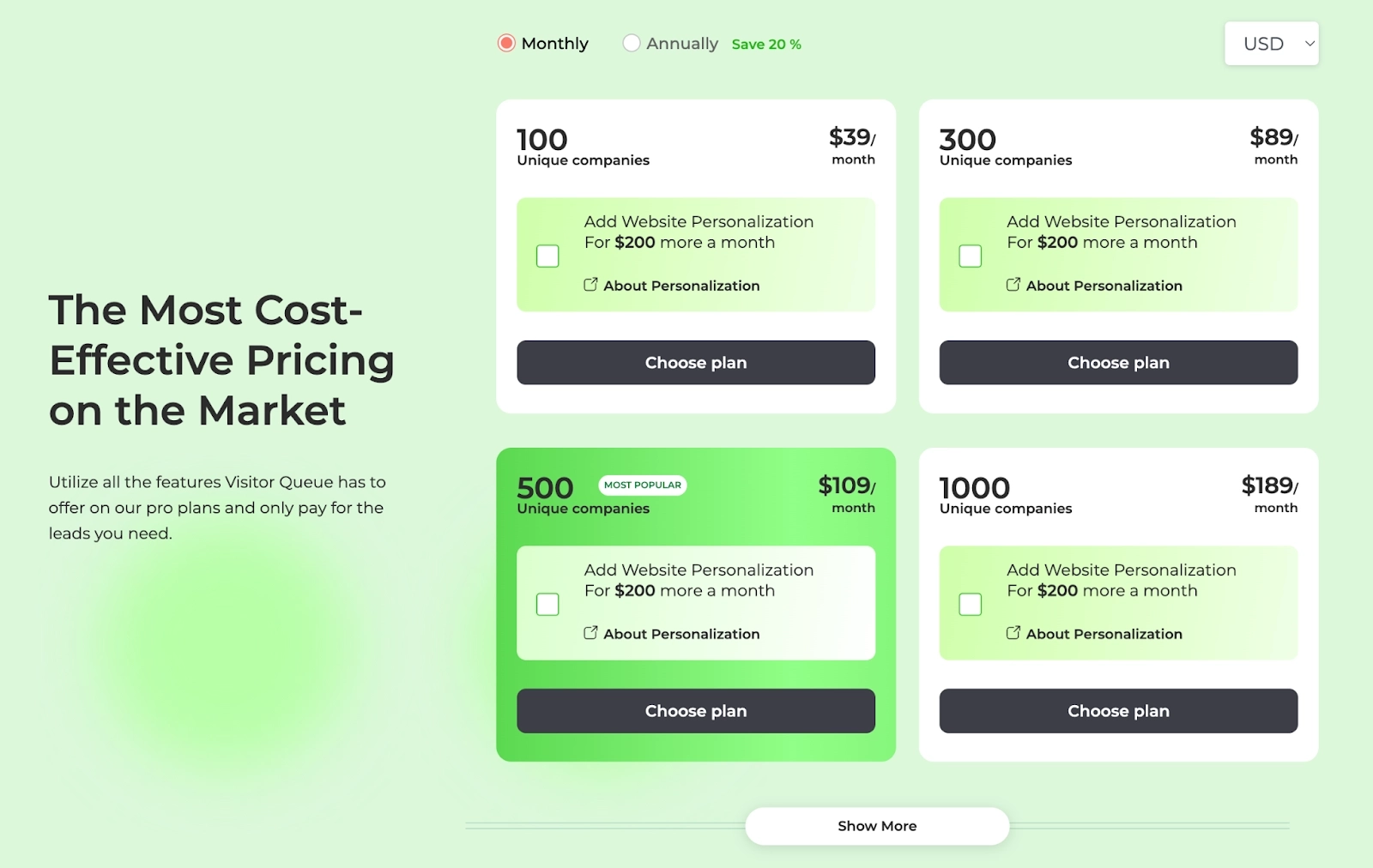

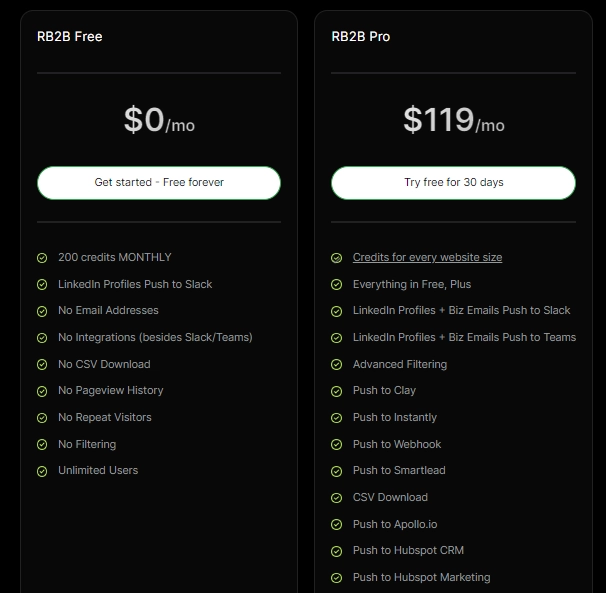

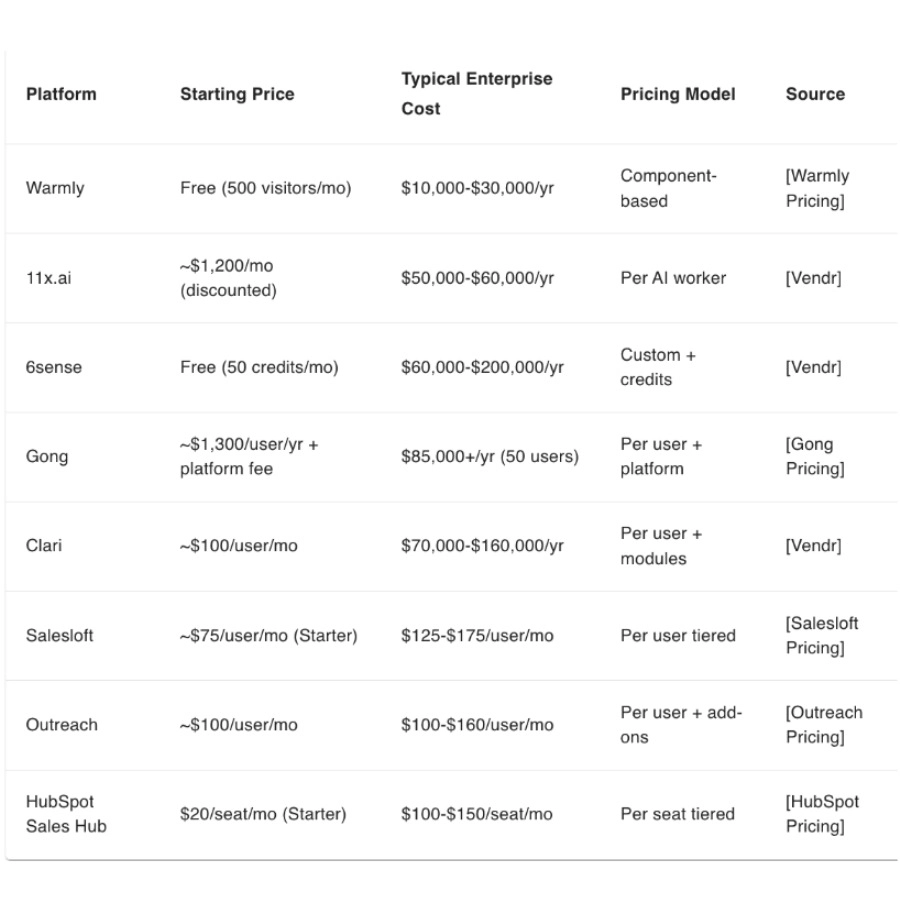

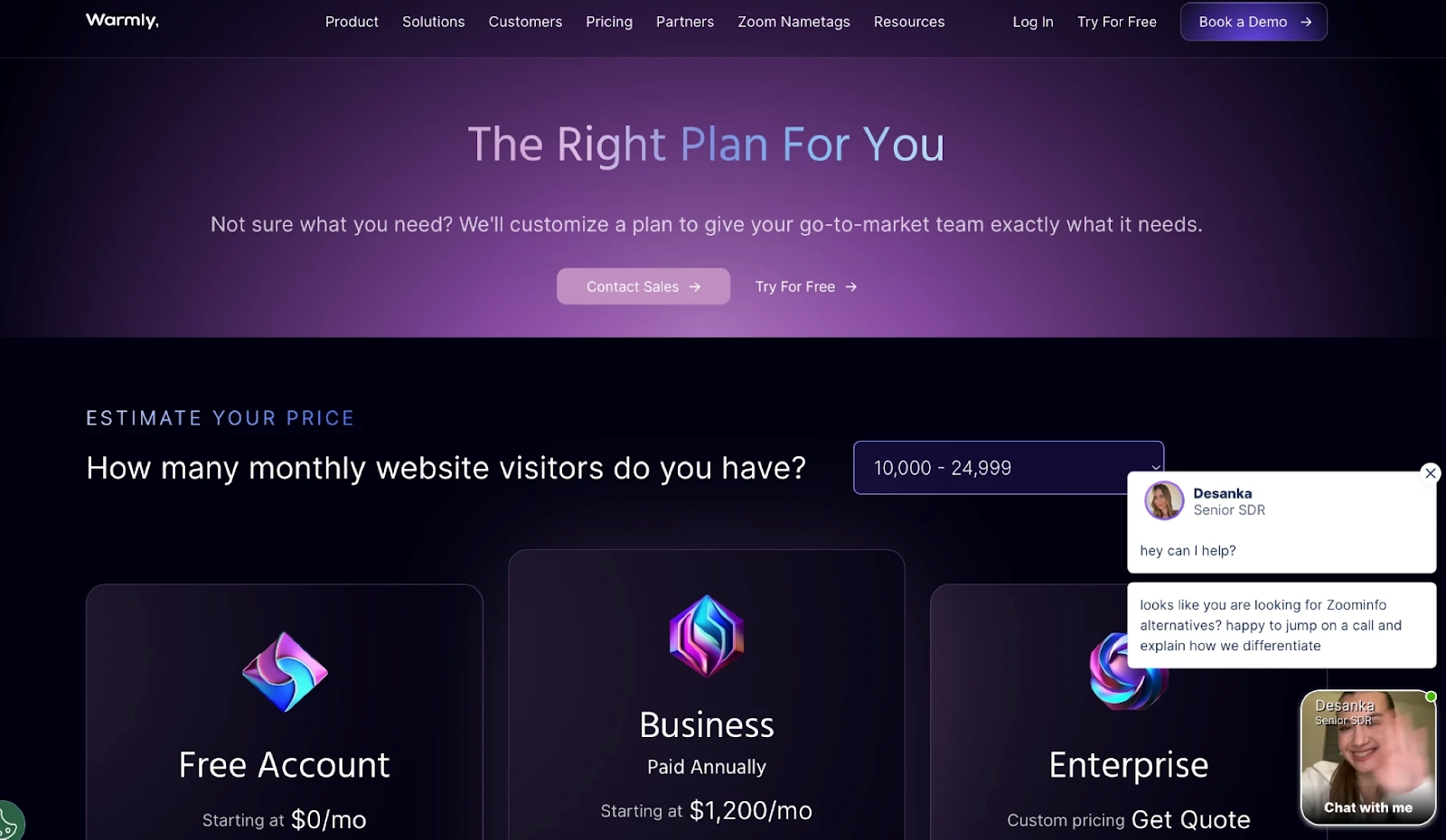

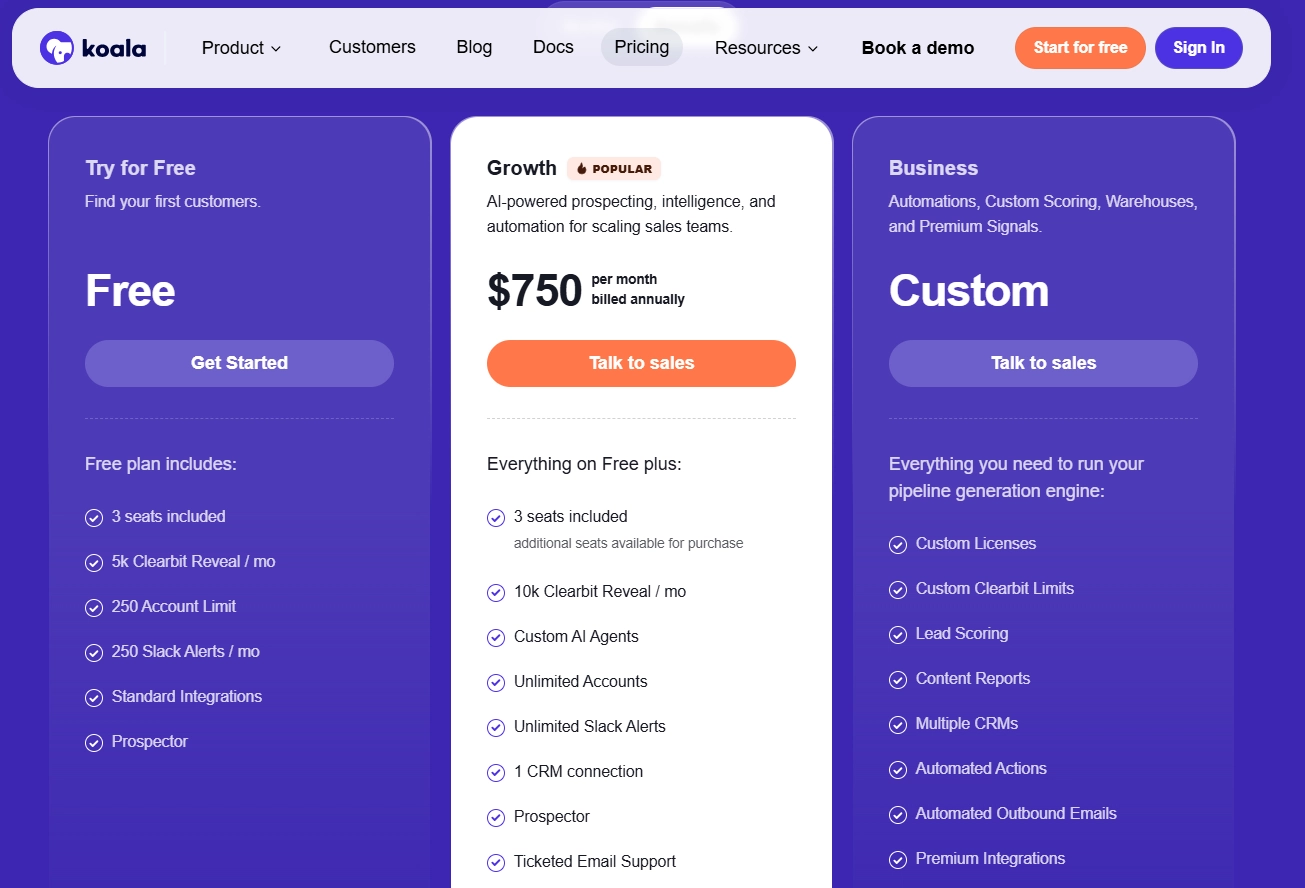

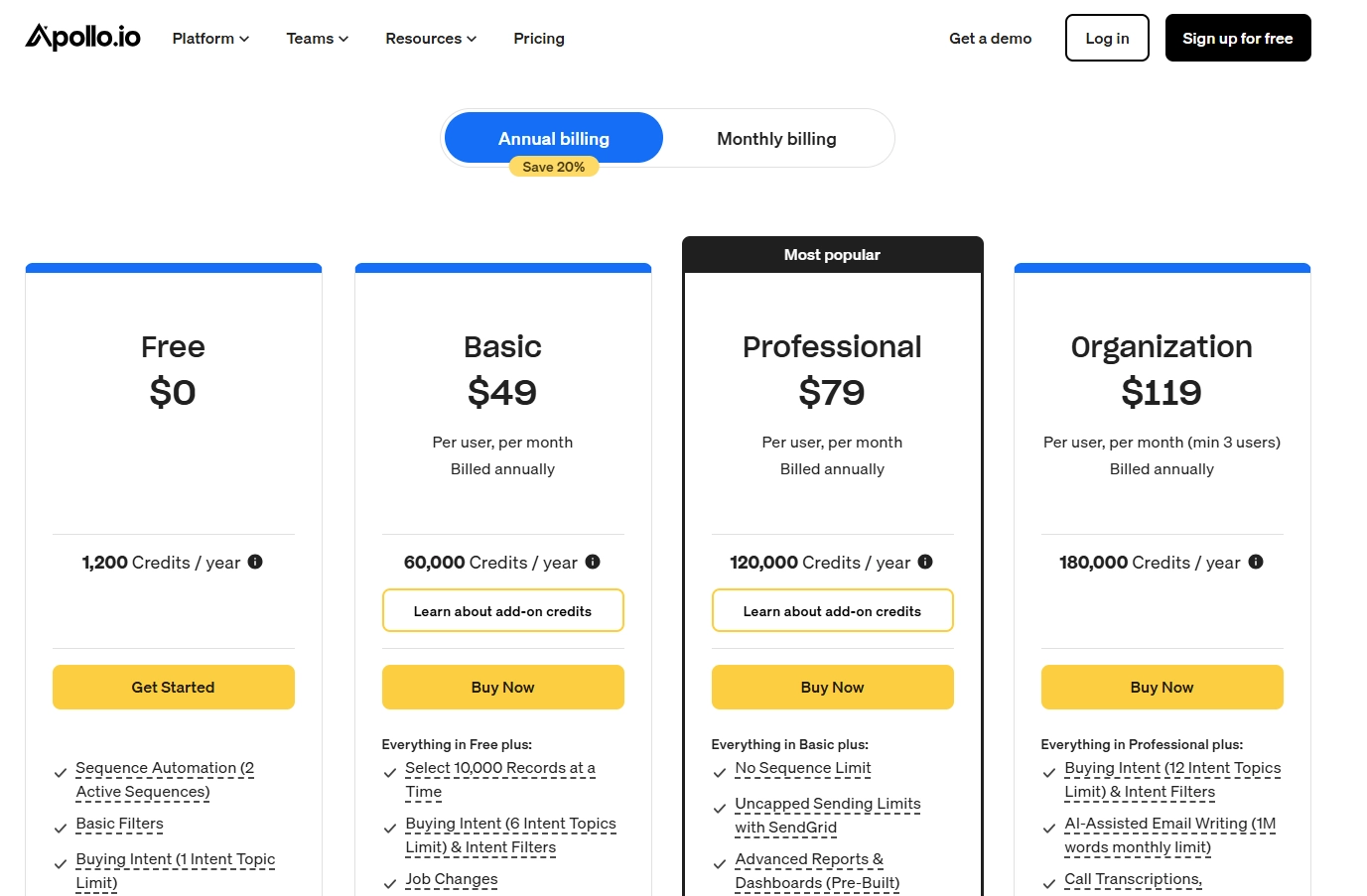

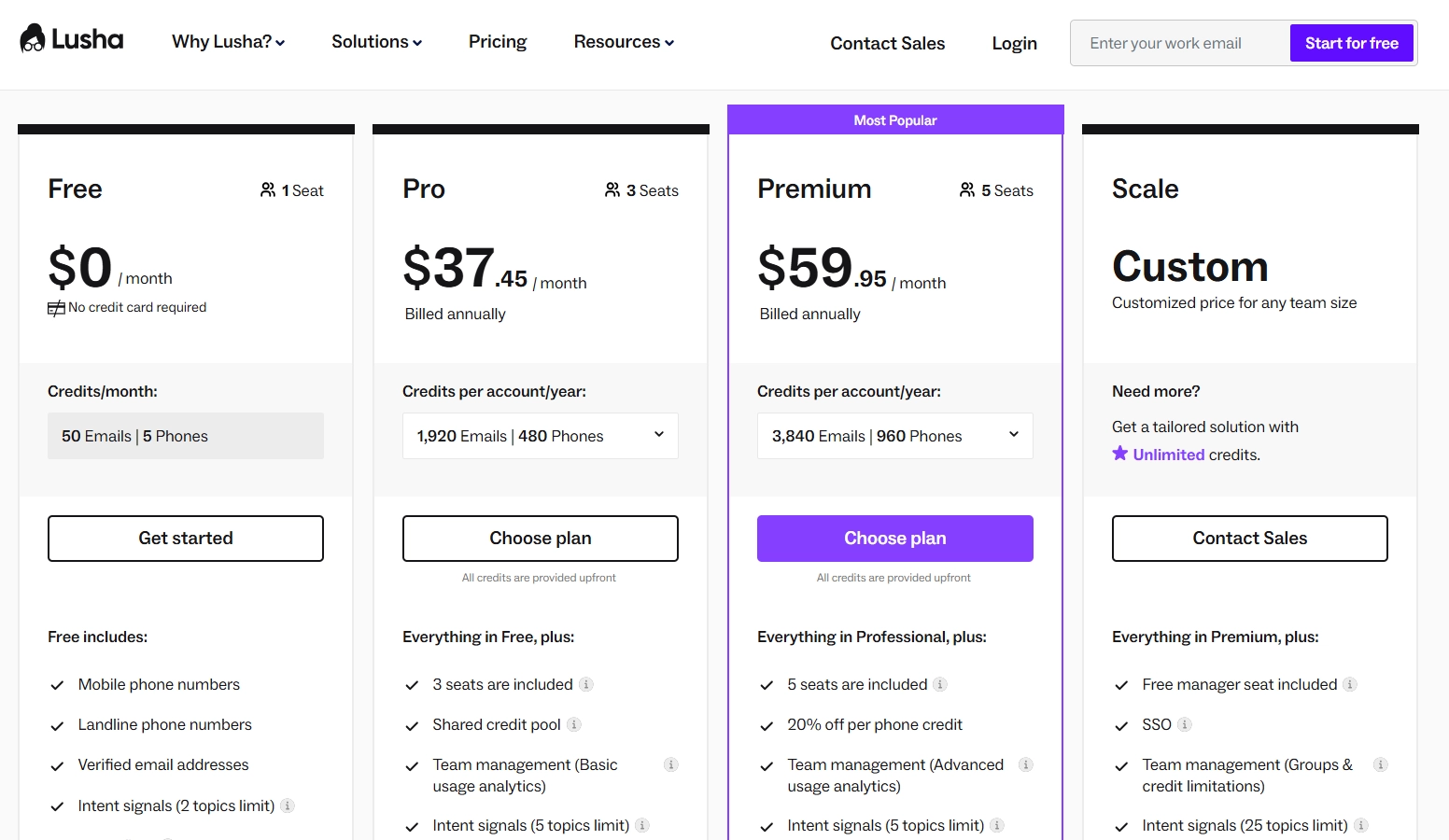

Pricing Comparison: Long Horizon Platforms (2026)

Pricing Details by Platform

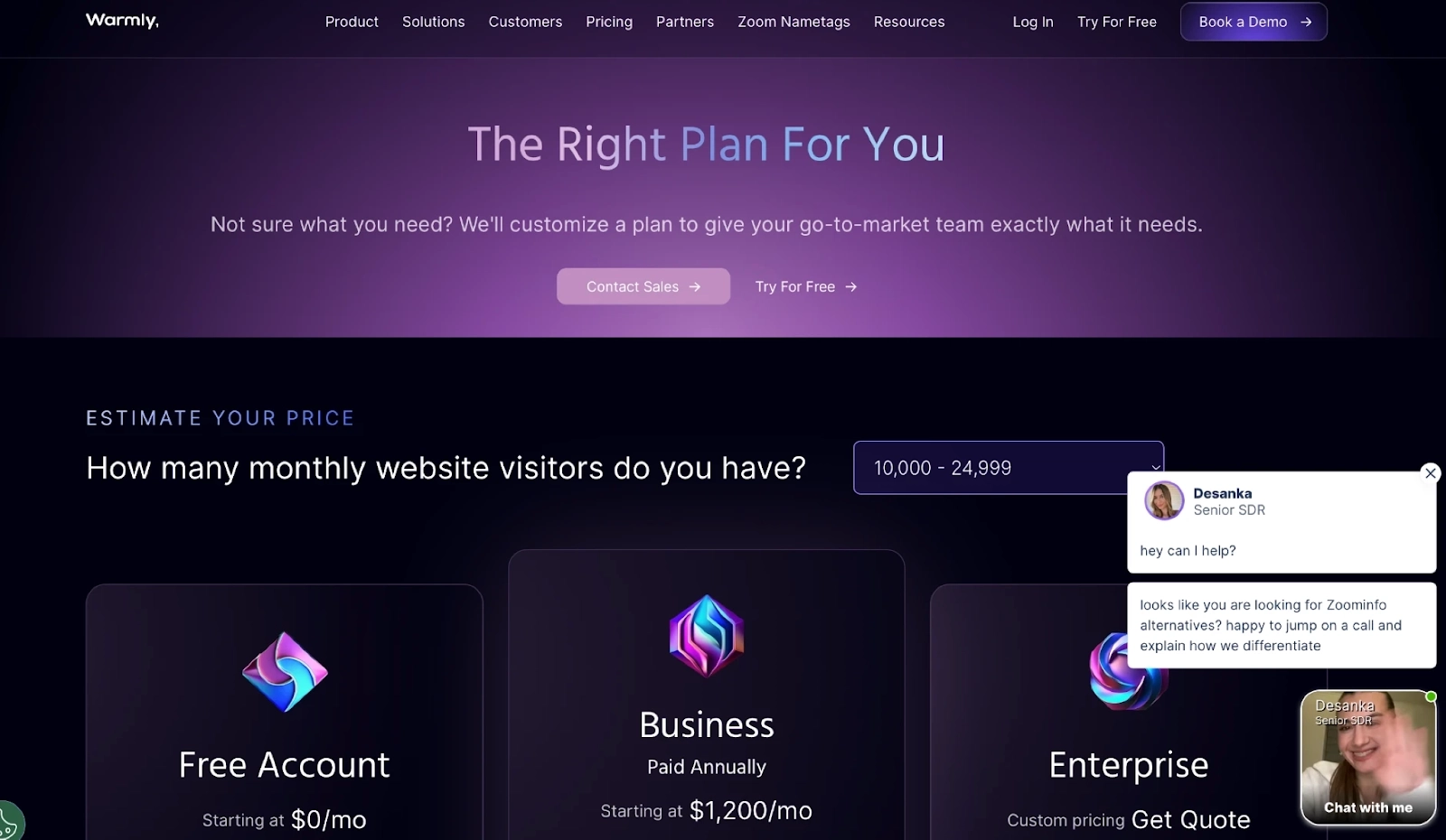

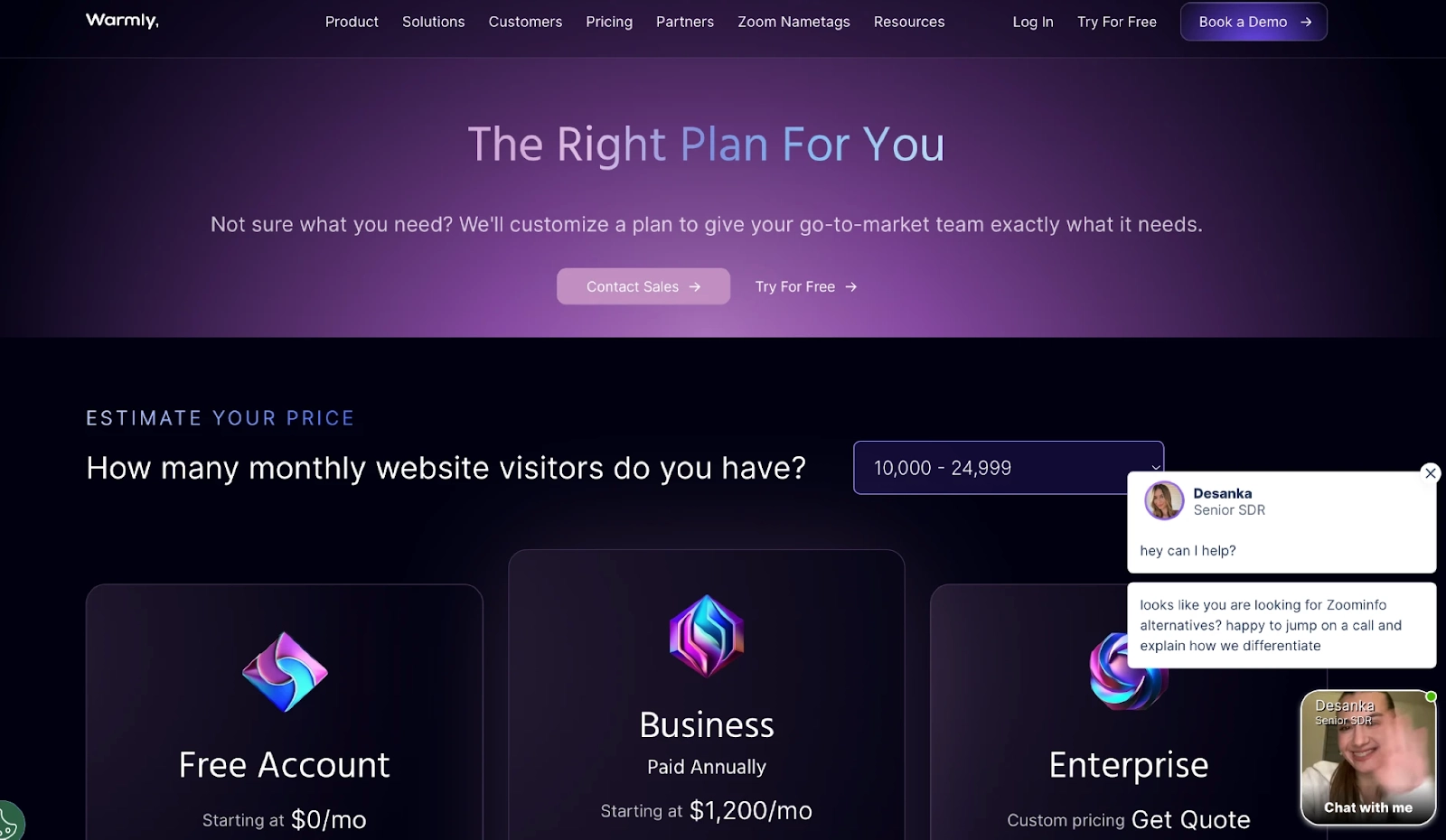

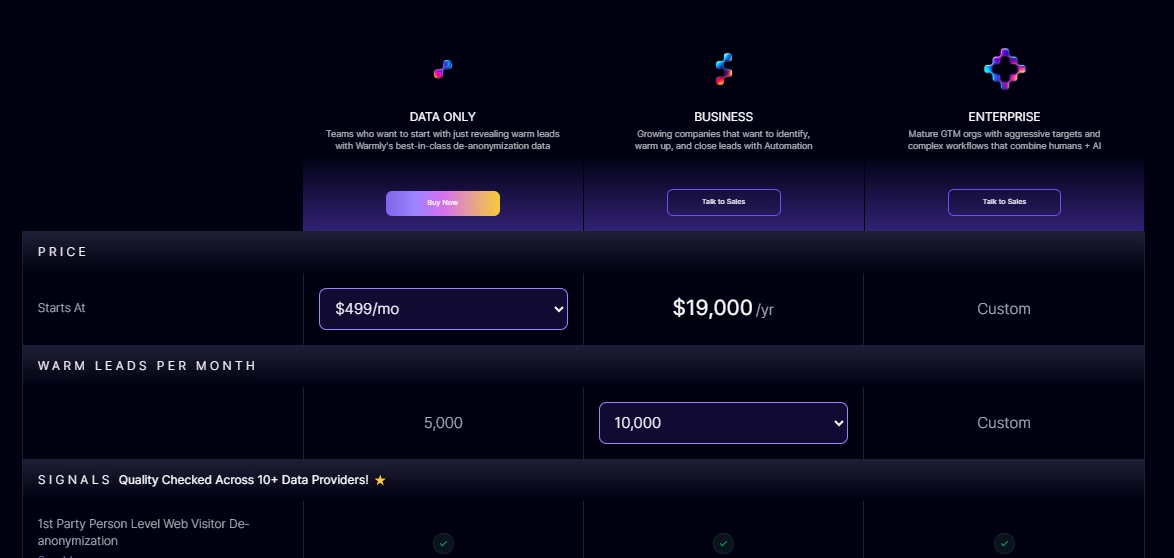

Warmly offers a modular approach with a free tier (500 visitors/month). Paid plans scale by capability: AI Data Agent starts at $10,000/yr, AI Inbound Agent at $16,000/yr, AI Outbound Agent at $22,000/yr, and Marketing Ops Agent at $25,000/yr.

11x.ai doesn't publish pricing publicly. Third-party sources report costs ranging from $1,200/month (with discounts) to $5,000/month depending on features and commitment. Annual contracts are typically required. (Source: Vendr data)

6sense uses custom enterprise pricing. According to Vendr, the median buyer pays $55,211/year, with costs ranging up to $130,000+/year for full enterprise access. Implementation fees add $5,000-$50,000 depending on complexity.

Gong charges a platform fee ($5,000-$50,000/year) plus per-user costs ($1,300-$1,600/user/year). A 50-user deployment typically costs $85,000+ annually before onboarding fees ($7,500). (Source: Gong pricing page)

Clari (now merged with Salesloft) offers modular pricing: Core forecasting runs ~$100-125/user/month, and Copilot conversation intelligence adds ~$100/user/month. Full-featured deployments reach $200-310/user/month. (Source: Vendr data)

Salesloft offers tiered pricing: Standard ($75/user/month), Professional ($125/user/month), and Advanced ($175/user/month). Volume discounts of 33-45% are available at 25+ users. (Source: Salesloft pricing page)

Outreach pricing isn't publicly listed, but industry estimates place it at $100-160/user/month. Enterprise deployments (200+ users) can negotiate 9-55% discounts on multi-year contracts. (Source: Outreach pricing page)

HubSpot Sales Hub has transparent pricing: Starter at $20/seat/month, Professional at $100/seat/month (+ $1,500 onboarding), and Enterprise at $150/seat/month (+ $3,500 onboarding, annual commitment required). (Source: HubSpot pricing page)

Hidden Costs to Watch

Beyond subscription fees, budget for:

- Implementation: $5,000-$75,000 depending on complexity and vendor

- Training: $300-$500/user for certification programs

- Integrations: Custom integrations can add $10,000-$50,000

- Overages: Credit-based systems (6sense, data enrichment) charge for usage beyond limits

- Renewal increases: Many contracts include automatic price increases (negotiate caps)

Negotiation Tips

Based on Vendr transaction data and user reports:

- End-of-quarter timing can yield 20-40% discounts

- Multi-year commitments unlock 8-15% additional savings

- Bundling multiple products improves per-user pricing

- Competing bids create leverage (vendors know when you're evaluating alternatives)

Warmly's Approach

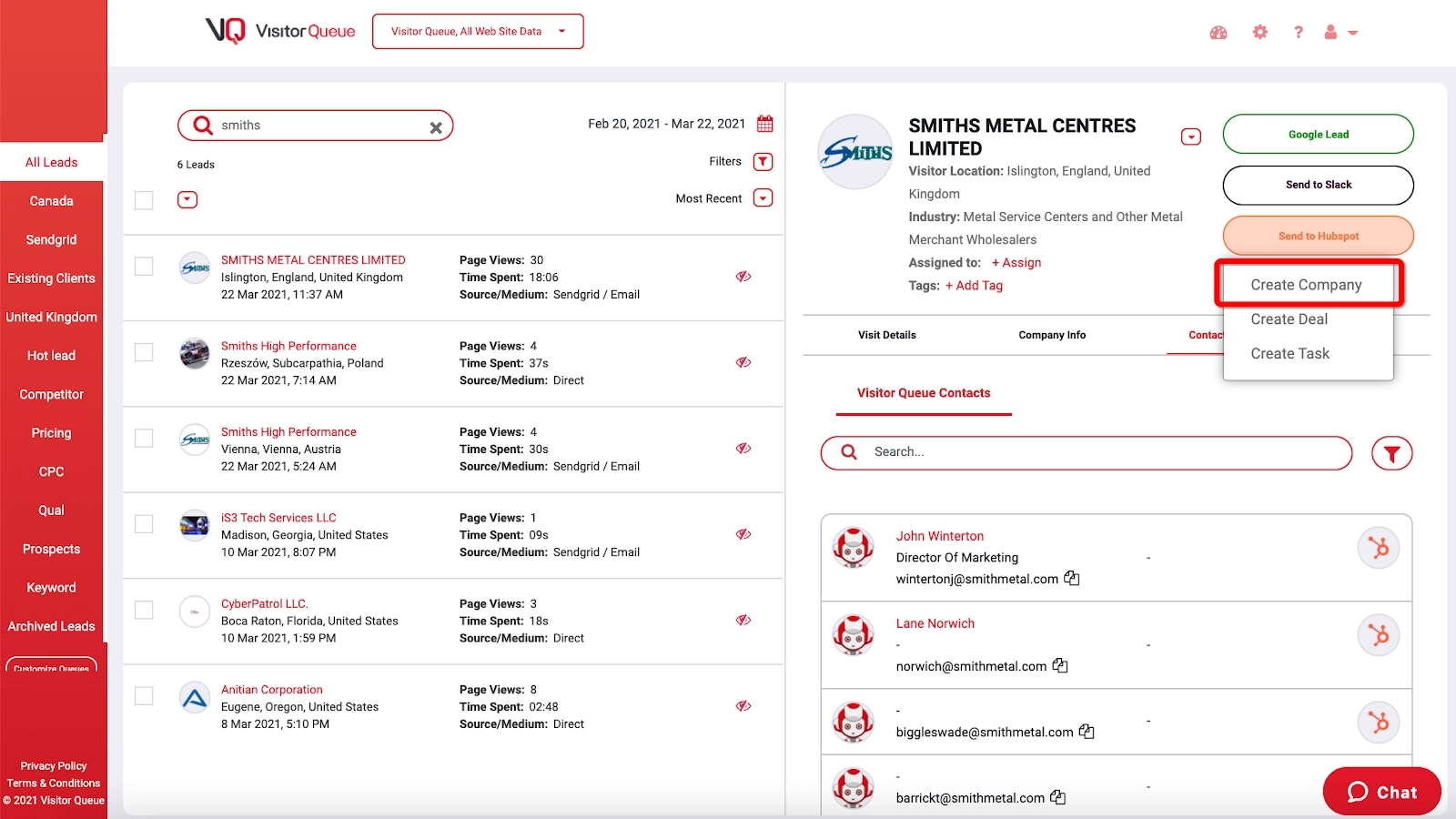

We built long horizon architecture because our customers sell to enterprises with multi-stakeholder buying committees. The AI inbound agent needs to know that the visitor today was nurtured by the AI marketing ops agent last month.

Our system maintains:

- 400M+ person profiles with multi-vendor consensus

- Entity relationships across companies, people, and deals

- Decision traces for every AI action

- Outcome attribution from touch to close

We're not the right fit if you need high-volume, low-touch automation. We're built for teams where context compounds.

How to Evaluate Long Horizon Capabilities

If you're evaluating AI GTM tools, here are the questions that separate genuine long horizon systems from marketing claims:

1. "How long do you retain context for a specific contact?"

Bad answer: "We personalize based on recent activity"

Good answer: "We maintain full entity history with computed summaries, typically 12-18 months of context"

2. "Can you show me the decision trace for a specific action?"

Bad answer: "We log all actions in an activity feed"

Good answer: "Here's the exact context, policy version, and reasoning that led to this decision, plus the outcome when we observed it"

3. "How do you handle the same person across multiple systems?"

Bad answer: "We sync with your CRM"

Good answer: "We run multi-vendor identity resolution with consensus scoring, achieving ~90% accuracy on entity matching"

4. "How does the system improve over time?"

Bad answer: "We use the latest AI models"

Good answer: "We track decision-to-outcome attribution and update policies based on what actually drives revenue"

5. "How do you prevent duplicate or conflicting touches?"

Bad answer: "We have suppression lists"

Good answer: "Multi-agent coordination with shared context means agents know what others have done and planned"

The Honest Limitations

Long horizon agents aren't magic. Here's where they struggle:

Data requirements are real. You need enough volume to learn patterns. If you close 5 deals a quarter, there's not enough signal to train on.

Complexity costs. Building and maintaining this architecture is harder than buying a simple tool. It's worth it for the right use cases, overkill for others.

Cold start problem. The system gets smarter over quarters. Month one won't be dramatically better than simpler tools.

Integration overhead. To maintain entity relationships, you need to connect data sources. The more fragmented your stack, the harder this is.

If your sales cycle is under 14 days, your deal volume is low, or you're not ready to invest in data infrastructure, start with simpler AI sales automation and grow into long horizon as you scale.

Frequently Asked Questions

What are long horizon agents for GTM?

Long horizon agents are AI systems designed to maintain context, track decisions, and learn from outcomes over extended time periods (weeks to quarters) rather than executing isolated tasks. Unlike traditional automation that "forgets" after each interaction, long horizon agents build a persistent world model of entities (companies, people, deals) and their relationships. This enables them to coordinate multi-channel engagement across buying committees and improve based on what actually closes deals, not just what gets clicks.

What's the difference between an AI SDR and a long horizon agent?

AI SDRs typically operate at the task level with short memory: send sequence, track replies, update CRM. They optimize for email opens and response rates. Long horizon agents operate at the goal level with persistent memory: they understand buying committees, coordinate with other agents (marketing, CS), track outcomes over months, and optimize for closed revenue. An AI SDR might send the same sequence to someone who already talked to your AE last month. A long horizon agent knows to coordinate.

How do AI agents learn from sales outcomes?

Through a Decision Ledger architecture. Every decision is logged with: what was decided, what context existed, what policy triggered it, and what outcome resulted. When a deal closes (or doesn't), that outcome is attributed back to the decisions that preceded it. Over time, patterns emerge: "LinkedIn outreach to VPs at high-intent accounts with previous website engagement closes 40% better than cold email." These patterns update the policies that govern future decisions.

Which GTM AI tools have persistent memory?

Most don't, or have limited memory (30-day contact history). Tools with genuine persistent memory typically have: (1) A graph database or equivalent for entity relationships, (2) Identity resolution across data sources, (3) Immutable decision logging, (4) Explicit outcome attribution. Ask vendors specifically about retention periods and entity relationship modeling. If they talk about "recent activity" rather than "entity history," they're short horizon.

How do you implement AI agents that track buyer journeys over time?

The core architecture requires: (1) Context Graph connecting companies, people, deals, and activities with relationships, (2) Identity resolution to know that John from the website is the same John in your CRM and LinkedIn, (3) Decision Ledger logging every AI decision with context, (4) Outcome attribution connecting closed deals back to the touches that contributed, (5) Policy engine that updates based on observed patterns. You can start with PostgreSQL and grow into specialized infrastructure as you scale.

Are long horizon AI agents worth the complexity?

Yes if: Your sales cycle exceeds 30 days, you're running ABM motions, you have multiple agents/channels to coordinate, you care about understanding what actually drives revenue. No if: Your sales cycle is under 14 days, you're optimizing for volume over precision, you don't have the data infrastructure to feed a persistent context layer, you're early stage with limited deal volume to learn from.

How do long horizon agents handle buying committee changes?

This is where they excel. The Context Graph tracks employment relationships with temporal awareness. When a champion changes jobs (detected via LinkedIn monitoring or data vendor updates), the system knows: (1) The champion left, (2) Their replacement needs to be identified and engaged, (3) The former champion is now at a new company (potential new opportunity), (4) The deal risk increased (alert the AE). Short horizon systems just see "contact no longer at company" and stop.
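The four reactions above map naturally onto a small event handler. In this sketch, `Deal`, `handle_job_change`, and the task strings are hypothetical; detecting the job change itself (LinkedIn monitoring or vendor updates) is assumed to happen upstream.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Deal:
    deal_id: str
    account: str
    champion: Optional[str]
    risk: str = "normal"


def handle_job_change(deal: Deal, person: str, new_company: str) -> list[str]:
    """React to a detected job change; returns follow-up tasks (empty if `person` isn't this deal's champion)."""
    if deal.champion != person:
        return []
    deal.champion = None    # (1) the champion left
    deal.risk = "elevated"  # (4) deal risk increased
    return [
        f"identify and engage a replacement champion at {deal.account}",         # (2)
        f"flag {new_company} as a potential new opportunity via {person}",       # (3)
        f"alert the AE on {deal.deal_id}: champion left {deal.account}",         # (4)
    ]


deal = Deal("deal-1234", "company:acme", champion="person:sarah")
tasks = handle_job_change(deal, "person:sarah", "company:newco")
```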

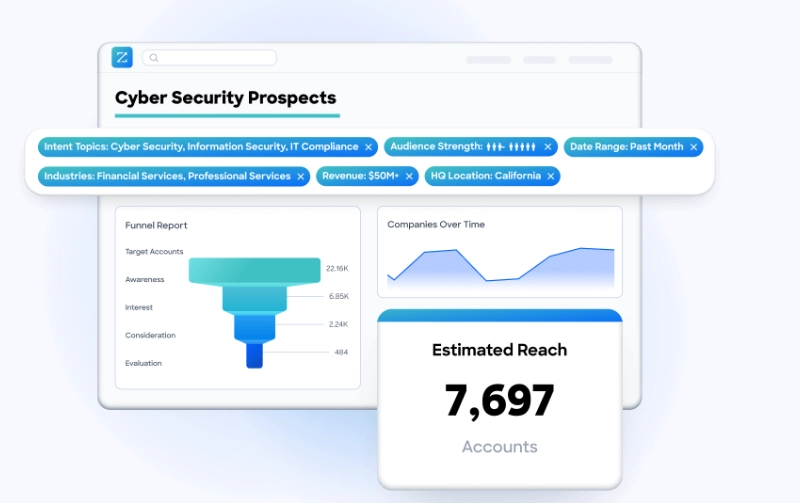

What data sources feed long horizon GTM agents?

Comprehensive long horizon systems ingest: First-party signals (website visits, chat, form fills), second-party signals (social engagement, community), third-party signals (research intent from Bombora, firmographics from Clearbit/ZoomInfo), CRM data (deals, activities, historical relationships), and enrichment data (contact info, job changes, company news). The system's job is to unify these through identity resolution and maintain a coherent entity model over time.

What is a context graph for GTM?

A context graph is a unified data architecture that connects every entity in your go-to-market ecosystem (companies, people, deals, activities, outcomes) into a single queryable structure that AI agents can reason over. Unlike flat CRM records or data warehouses that store facts, context graphs store meaning: relationships, temporal changes, and decision traces. For GTM, this means knowing not just that "John visited your website" but that John works at Acme, reports to Sarah the CRO, is the champion on an active deal, previously worked at your customer BigCo, and has been increasingly engaged over the past 30 days.

What is AI agent memory and why does it matter for sales?

AI agent memory refers to a system's ability to store and recall past experiences to improve decision-making. Unlike traditional LLMs that process each task independently, AI agents with memory retain context across sessions. For sales specifically, this means: remembering previous conversations with a prospect, knowing their objections from 3 months ago, understanding their relationship to other stakeholders in the buying committee, and tracking how their engagement has evolved. Most AI SDRs have only short-term memory (within a session). Long horizon agents have true long-term memory that persists across quarters.

Do AI sales agents need audit trails?

Yes, increasingly so. An AI audit trail documents what the agent did, when, why, and with what data. This matters for: (1) Compliance: The EU AI Act mandates decision logs for high-risk AI systems, (2) Debugging: When something goes wrong, you need to understand why, (3) Trust: Leadership will ask why the AI made specific decisions about key accounts, (4) Learning: Connecting decisions to outcomes enables continuous improvement. Without audit trails, AI agents are black boxes. With them, you can explain any decision and improve based on what works.

What are the best AI tools for long enterprise B2B sales cycles?

For sales cycles over 90 days, you need tools that maintain context across quarters. Top platforms include: Warmly for buying committee tracking with context graph architecture, Clari/Salesloft for revenue intelligence and deal forecasting, 6sense for ABM intent data, Gong for conversation intelligence with deal insights. The key evaluation criteria: persistent memory (not just 30-day history), entity relationships (buying committee modeling), decision logging (audit trails), and outcome attribution (connecting touches to closed deals).

How do AI agents coordinate across sales and marketing channels?

Multi-agent coordination requires shared context. When multiple AI agents operate (SDR outbound, marketing nurture, AE follow-up), they need to know what others have done to avoid conflicts. Good coordination means: shared entity state (everyone sees the same account context), activity awareness (knowing what touches have happened), policy coordination (respecting frequency limits across channels), and outcome attribution (crediting the right touches). Without coordination, prospects get three messages in one day from the same company. With coordination, they get a coherent experience.
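Cross-channel frequency limits only work if every agent checks the same touch history before acting. This Python sketch assumes a hypothetical `SharedTouchLog` with an example policy of one touch per contact per rolling 24 hours across all channels. It is single-process and in-memory. Agents running as separate services would need a shared store with an atomic check-and-write, so that two agents cannot both pass the check at the same moment.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Touch:
    agent: str
    channel: str
    contact: str
    at: datetime


@dataclass
class SharedTouchLog:
    """One log that every agent reads and writes, so limits apply across channels."""
    max_touches: int = 1                   # example policy: at most 1 touch...
    window: timedelta = timedelta(days=1)  # ...per contact per rolling day, any channel
    touches: list[Touch] = field(default_factory=list)

    def can_touch(self, contact: str, now: datetime) -> bool:
        recent = [t for t in self.touches if t.contact == contact and now - t.at < self.window]
        return len(recent) < self.max_touches

    def try_touch(self, agent: str, channel: str, contact: str, now: datetime) -> bool:
        """Record the touch and return True if policy allows it; otherwise return False."""
        if not self.can_touch(contact, now):
            return False
        self.touches.append(Touch(agent, channel, contact, now))
        return True
```

With this policy, an SDR email at 9:00 blocks the nurture email at noon and the AE's LinkedIn message that afternoon, which is exactly the "three messages in one day" case the coordination layer is meant to prevent.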

What's the difference between agentic AI and long horizon agents?

Agentic AI refers to autonomous AI that can plan, execute, and optimize tasks without constant human guidance. Long horizon agents are a specific type of agentic AI designed for extended time periods. The difference: most agentic AI operates at the task level (complete this email sequence), while long horizon agents operate at the goal level (close this deal over the next quarter). Long horizon agents require additional architecture: persistent memory, decision ledgers, outcome attribution, and policy evolution. All long horizon agents are agentic, but not all agentic AI is long horizon.

How do you measure ROI on long horizon AI agents?

ROI measurement requires connecting decisions to outcomes over extended periods. Key metrics: (1) Deal attribution: which AI touches contributed to closed revenue, (2) Cycle acceleration: are deals closing faster with AI assistance, (3) Coverage efficiency: how many accounts one rep with AI can handle vs. a rep alone, (4) Quality metrics: reply rates, meeting rates, conversion rates by stage, (5) Learning rate: is the system improving quarter over quarter. The challenge: outcomes take 90-180 days to materialize. You need patience and proper attribution to measure long horizon ROI accurately.
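Deal attribution can be computed several ways (first-touch, last-touch, time-decay, position-based). The Python sketch below uses simple linear attribution, splitting each closed-won deal's amount evenly across its touches, as one illustrative choice. The deal and touch field names are assumptions. Because outcomes arrive 90-180 days after the touches, the touch history has to be retained at least that long for this calculation to be possible at all.

```python
from collections import defaultdict


def linear_attribution(deals: list[dict]) -> dict[str, float]:
    """Split each closed-won deal's amount evenly across the touches on that deal."""
    credit: dict[str, float] = defaultdict(float)
    for deal in deals:
        # Lost or open deals, and deals with no recorded touches, earn no credit
        if deal["stage"] != "closed_won" or not deal["touches"]:
            continue
        share = deal["amount"] / len(deal["touches"])
        for touch in deal["touches"]:
            credit[touch["source"]] += share
    return dict(credit)


deals = [
    {"stage": "closed_won", "amount": 90_000,
     "touches": [{"source": "ai_sdr"}, {"source": "ae"}, {"source": "ai_sdr"}]},
    {"stage": "closed_lost", "amount": 50_000, "touches": [{"source": "ai_sdr"}]},
    {"stage": "closed_won", "amount": 40_000,
     "touches": [{"source": "marketing"}, {"source": "ae"}]},
]
print(linear_attribution(deals))
# {'ai_sdr': 60000.0, 'ae': 50000.0, 'marketing': 20000.0}
```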

Building for the Long Game

The GTM tools that defined the last decade were built for a different era. Email blast platforms, basic sequences, simple lead scoring. They assumed humans would do the thinking and tools would do the executing.

AI changes that equation. But only if the AI can actually think across time.

Most "AI agents" on the market are just faster versions of the old tools. They execute tasks quickly but forget everything. They optimize for activity metrics (emails sent, tasks completed) rather than outcomes (revenue generated, relationships built).

Long horizon agents are different. They maintain a world model. They remember decisions and learn from outcomes. They coordinate across channels and stakeholders. They think in quarters, not minutes.

Building this architecture is harder than buying a simple tool. It requires real investment in data infrastructure, identity resolution, and decision logging. It takes time to accumulate enough outcomes to learn from.

But the companies that build it will have AI that actually compounds. That gets smarter every quarter instead of just faster. That can tell you not just what happened, but why, and what to do differently.

That's the difference between automation and intelligence.

Ready to see long horizon agents in action? Book a demo to see how Warmly's architecture handles persistent context, decision traces, and outcome attribution. Or explore our AI Signal Agent to see unified entity resolution powering real-time action.

Last updated: January 2026
